
Researchers Release Open Source Counterfactual Machine Learning Library

- By Pure AI Editors

- 03/20/2020

Researchers at Microsoft have released an open source code library for generating machine learning counterfactuals. The Pure AI editors talked to Dr. Amit Sharma, one of the project leaders, and asked him to explain what machine learning counterfactuals are and why they're important.

What Are Counterfactuals?

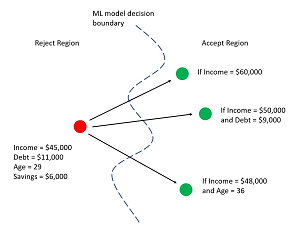

Exactly what machine learning counterfactuals are, and why they are important, is best explained by example. Suppose a loan company has a trained ML model that is used to approve or decline customers' loan applications. The predictor variables (often called features in ML terminology) are things like annual income, debt, age, sex, savings, and so on. A customer submits a loan application with an income of $45,000, debt of $11,000, an age of 29, and savings of $6,000. The application is declined.

A counterfactual is a change to one or more predictor values that flips the model's prediction to the opposite outcome. For example, one possible counterfactual could be stated in words as, "If your income were increased to $60,000, your application would have been approved."
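To make the definition concrete, here is a minimal Python sketch. The scoring rule, its weights, and the 42,000 threshold are hypothetical, chosen only so that the applicant described above is declined; they do not come from any real loan model. A counterfactual is then any modified input for which the prediction flips.

```python
# Hypothetical toy loan model: a hand-picked linear score with a threshold.
# The weights and threshold are illustrative assumptions, not a real model.
def approve(applicant: dict) -> bool:
    score = (applicant["income"]
             - 2 * applicant["debt"]
             + 500 * applicant["age"]
             + 0.5 * applicant["savings"])
    return score >= 42_000


def is_counterfactual(original: dict, changes: dict) -> bool:
    """True if applying `changes` to `original` flips the model's prediction."""
    modified = {**original, **changes}
    return approve(modified) != approve(original)


applicant = {"income": 45_000, "debt": 11_000, "age": 29, "savings": 6_000}

print(approve(applicant))                                # False (declined)
print(is_counterfactual(applicant, {"income": 60_000}))  # True
```

Under this toy rule, raising income to $50,000 while cutting debt to $9,000, or raising income to $48,000 with an age of 36, also flips the prediction, whereas raising income only to $46,000 does not.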

In general, there will be many possible counterfactuals for a given ML model and set of inputs. Two other counterfactuals might be, "If your income were increased to $50,000 and your debt decreased to $9,000, your application would have been approved" and "If your income were increased to $48,000 and your age changed to 36, your application would have been approved." Figure 1 illustrates three such counterfactuals for a loan scenario.

Figure 1: Three Counterfactuals for Loan Application Scenario

The first two of these counterfactuals could be useful to the loan applicant because they provide information that can be acted upon to get a new loan application approved: increase income moderately, or increase income slightly and also reduce debt slightly. The third counterfactual is not directly useful to the applicant because they cannot change their age from 29 to 36. However, the third counterfactual, and others like it, could be useful to the developers of the loan application ML model because they provide insight into how the model works.

The loan application example illustrates three main challenges in generating useful counterfactuals. First, proximity: the predictor values in a counterfactual should be close to the original input values. For example, a counterfactual of, "If your income were increased to $1,000,000, your application would have been approved" is unlikely to be useful. Second, diversity: a variety of counterfactuals should be generated, so that a loan applicant can be presented with several viable counterfactuals and select the best one for their particular situation. Third, feasibility: the counterfactual must make sense within its specific context. For example, a counterfactual that involves changing the age or sex of the applicant is likely not relevant, at least to the loan applicant.
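The three requirements can be expressed as simple checks. The sketch below is a simplified illustration, not DiCE's exact definitions: it assumes proximity is an L1 distance with each numeric feature divided by a hypothetical scale, feasibility means leaving immutable features (here age and sex) unchanged, and diversity is the mean pairwise distance within a set of counterfactuals.

```python
from itertools import combinations

# Hypothetical scale for each numeric feature, so that a dollar of income and
# a year of age are not compared directly. These values are assumptions.
SCALE = {"income": 10_000, "debt": 5_000, "age": 10, "savings": 5_000}
IMMUTABLE = {"age", "sex"}  # features an applicant cannot act on


def distance(a: dict, b: dict) -> float:
    """Proximity: scaled L1 distance over the numeric features."""
    return sum(abs(a[f] - b[f]) / s for f, s in SCALE.items())


def is_feasible(original: dict, cf: dict) -> bool:
    """Feasibility: no immutable feature may change."""
    return all(cf.get(f) == original.get(f) for f in IMMUTABLE)


def diversity(cfs: list) -> float:
    """Diversity: mean pairwise distance among a set of counterfactuals."""
    pairs = list(combinations(cfs, 2))
    if not pairs:
        return 0.0
    return sum(distance(a, b) for a, b in pairs) / len(pairs)


original = {"income": 45_000, "debt": 11_000, "age": 29, "savings": 6_000, "sex": "F"}
cf1 = {**original, "income": 60_000}
cf2 = {**original, "income": 50_000, "debt": 9_000}
cf3 = {**original, "income": 48_000, "age": 36}

for cf in (cf1, cf2, cf3):
    print(round(distance(original, cf), 2), is_feasible(original, cf))
print(round(diversity([cf1, cf2]), 2))
```

With these assumed scales the three loan counterfactuals have distances of 1.5, 0.9, and 1.0, so the income-plus-debt change is the closest, and the third is flagged as infeasible because it changes age.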

In addition to the three conceptual challenges described above, there are several technical challenges involved in generating counterfactuals. One of these is that there can be an astronomically large number of counterfactual patterns with regard to which predictor variables are changed. Suppose an ML model has 20 predictor variables. There are Choose(20, 1) = 20 possible counterfactuals that involve changing the value of a single predictor, and Choose(20, 2) = 190 possible counterfactuals that involve changing 2 of the 20 predictors. Because each predictor is either changed or left alone, there are 2^20 - 1 = 1,048,575 possible non-empty combinations of predictor variables altogether. And if an ML model has 50 predictor variables, there are 2^50 - 1 = 1,125,899,906,842,623 possible combinations.
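The counts can be checked directly. This short sketch sums Choose(n, k) over every subset size k from 1 to n and confirms the total equals 2^n - 1 (it uses `math.comb`, available in Python 3.8 and later).

```python
from math import comb


def num_feature_subsets(n: int) -> int:
    """Number of non-empty subsets of n predictors: sum of C(n, k) for k = 1..n."""
    return sum(comb(n, k) for k in range(1, n + 1))


print(comb(20, 1), comb(20, 2))  # 20 190
print(num_feature_subsets(20))   # 1048575
print(num_feature_subsets(50))   # 1125899906842623
assert num_feature_subsets(20) == 2**20 - 1
```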

The Diverse Counterfactual Explanations Library

The counterfactuals research effort is detailed in a paper titled "Explaining Machine Learning Classifiers through Diverse Counterfactual Explanations" by Ramaravind K. Mothilal (Microsoft), Amit Sharma (Microsoft), and Chenhao Tan (University of Colorado). The project produced an open source code library called Diverse Counterfactual Explanations (DiCE), which is available on GitHub.

The library is implemented in Python and currently supports Keras / TensorFlow models; support for PyTorch models is being added. The researchers applied the DiCE library to the well-known Adult Data Set benchmark, where the goal is to predict whether a person's annual income is more than $50,000 based on predictor variables such as education level, occupation type, and race.

The following partial code snippet illustrates what using the DiCE library looks like (elided arguments are shown as ...):

```python
import dice_ml

d = dice_ml.Data(...)    # load dataset
m = dice_ml.Model(...)   # load trained model
ex = dice_ml.Dice(d, m)  # create a DiCE explainer

q = {'age': 22, 'race': 'White'}  # model input; remaining features elided

# now generate 4 counterfactuals
cfs = ex.generate_counterfactuals(q, 4, ...)
```

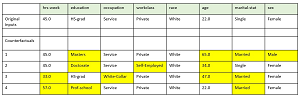

The four resulting counterfactuals are shown in Figure 2. The original inputs are:

- hrs-week = 45

- education = HS-grad

- occupation = Service

- workclass = Private

- race = White

- age = 22

- marital-stat = Single

- sex = Female

The model prediction using the original input values is that the person's income is less than $50,000.

Figure 2: Example Counterfactuals from the Adult Data Set

All four counterfactuals produce a prediction that the modified person has an income greater than $50,000. For example, the first counterfactual changes the values of four predictor variables: education changes from HS-grad to Masters; age changes from 22 to 65; marital status changes from Single to Married; and sex changes from Female to Male.

Notice that the generated counterfactuals are quite diverse. The DiCE library can be tuned to balance counterfactual diversity against proximity to the original set of predictor values.
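The trade-off between proximity and diversity can be illustrated with a weighted objective. The sketch below is not DiCE's algorithm (the DiCE paper optimizes its loss with gradient descent and a determinant-based diversity term); it swaps in plain random search over a hypothetical linear loan model. The model, the feature scales, the allowed direction of each change, and the parameter names `proximity_weight` and `diversity_weight` are all assumptions made for illustration.

```python
import random
from itertools import combinations


# Hypothetical toy loan model (illustrative weights and threshold only).
def approve(x: dict) -> bool:
    return x["income"] - 2 * x["debt"] + 500 * x["age"] + 0.5 * x["savings"] >= 42_000


# Assumed scales for the mutable features; age is never varied (feasibility).
SCALE = {"income": 10_000, "debt": 5_000, "savings": 5_000}


def distance(a: dict, b: dict) -> float:
    return sum(abs(a[f] - b[f]) / s for f, s in SCALE.items())


def diversity(cfs: list) -> float:
    pairs = list(combinations(cfs, 2))
    return sum(distance(a, b) for a, b in pairs) / len(pairs) if pairs else 0.0


def set_loss(original, cfs, proximity_weight, diversity_weight):
    """Lower is better: stay close to the original, but spread the set out."""
    proximity = sum(distance(original, c) for c in cfs) / len(cfs)
    return proximity_weight * proximity - diversity_weight * diversity(cfs)


def generate_counterfactuals(original, k, proximity_weight=1.0,
                             diversity_weight=1.0, n_candidates=2000,
                             n_trials=500, seed=0):
    rng = random.Random(seed)
    # 1. Sample perturbations in assumed "actionable" directions (more income,
    #    less debt, more savings) and keep those that flip the prediction.
    valid = []
    for _ in range(n_candidates):
        cf = dict(original)
        cf["income"] = original["income"] + rng.randrange(0, 30_001, 500)
        cf["debt"] = max(0, original["debt"] - rng.randrange(0, 11_001, 500))
        cf["savings"] = original["savings"] + rng.randrange(0, 10_001, 500)
        if approve(cf) != approve(original):
            valid.append(cf)
    if len(valid) < k:
        return valid
    # 2. Random search over k-sized sets for the lowest weighted loss.
    best, best_loss = None, float("inf")
    for _ in range(n_trials):
        cfs = rng.sample(valid, k)
        loss = set_loss(original, cfs, proximity_weight, diversity_weight)
        if loss < best_loss:
            best, best_loss = cfs, loss
    return best


applicant = {"income": 45_000, "debt": 11_000, "age": 29, "savings": 6_000}
for cf in generate_counterfactuals(applicant, k=3):
    print(cf)
```

Raising `diversity_weight` relative to `proximity_weight` pushes the returned set apart; raising `proximity_weight` pulls the counterfactuals toward the applicant's original values.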

What Does It All Mean?

Dr. Sharma noted, "The DiCE library can apply to any ML model. The key requirement is to have a set of features that can be changed. For tabular data, it's easy to use the input features. For deep NN models, it may make sense to use an intermediate layer of the network (e.g., the penultimate layer) rather than the inputs, since the inputs can be high-dimensional and less meaningful to change."

Research in the area of machine learning counterfactuals is quite active. In the short term, the challenge is to generate feasible counterfactual examples for an ML model; that is, the suggested changes should make sense in the domain. For example, in credit approval the changes should be actionable by the applicant, and in image classification the modified image should "make sense."

For the medium term, Dr. Sharma predicted, "I think we'll see ML models that are trained using counterfactual reasoning. Such models will generalize better to new domains and will also be more explainable by design."

And in the long term, "I suspect we'll see counterfactual reasoning being applied to understand the effects of ML systems." For example, A/B tests can only capture near-term effects (approximately one month), but counterfactual-based models may be able to estimate the long-term effect of a new advertising model.

Dr. Sharma commented, "Counterfactuals become more relevant in high-stakes decisions involving people, such as job candidate screening algorithms for hiring, showing job recommendations on LinkedIn ('what profile change would ensure that a job is shown to a user'), predictive policing, such as deciding which areas to send more police personnel to, and so on."

The Pure AI editors asked Dr. James McCaffrey of Microsoft Research what he thought about the new DiCE counterfactual code library. He replied, "I've implemented ad hoc counterfactual generation code from scratch and the process is very time-consuming and quite error-prone. The DiCE library greatly simplifies the process of generating counterfactuals and saves many hours of work. In short, I like DiCE a lot."

Machine learning counterfactuals are closely related to the idea of model interpretability. Each type of ML model has a degree of interpretability. For example, a k-nearest neighbors classification result is relatively interpretable because it is based on specific reference data items. A decision might be explained as, "The loan application was declined because the ML model used k = 2 and the two closest reference items to your application are Age = 36, Income = $42,000, etc."
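A minimal sketch of that kind of explanation, assuming a tiny hypothetical reference set and hand-picked feature scales: the k-NN prediction is returned together with the neighbors that produced it, so the neighbors themselves serve as the explanation.

```python
import math
from collections import Counter

# Hypothetical reference data: past applications and their outcomes.
REFERENCE = [
    ({"age": 36, "income": 42_000}, "declined"),
    ({"age": 31, "income": 44_000}, "declined"),
    ({"age": 50, "income": 90_000}, "approved"),
    ({"age": 45, "income": 75_000}, "approved"),
]


def scaled_distance(a: dict, b: dict) -> float:
    # Assumed scales (10 years, $10,000) so age and income are comparable.
    return math.hypot((a["age"] - b["age"]) / 10,
                      (a["income"] - b["income"]) / 10_000)


def knn_explain(query: dict, k: int = 2):
    """Return the k-NN prediction together with the neighbors that produced it."""
    neighbors = sorted(REFERENCE, key=lambda item: scaled_distance(query, item[0]))[:k]
    votes = Counter(label for _, label in neighbors)
    # most_common breaks ties by first occurrence, i.e. by the closest neighbor.
    prediction = votes.most_common(1)[0][0]
    return prediction, neighbors


prediction, neighbors = knn_explain({"age": 29, "income": 45_000})
print(prediction)  # declined
for features, label in neighbors:
    print(features, label)
```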

However, some ML models, notably deep neural networks, are black boxes for the most part. For such models, counterfactual reasoning is often the best means of interpreting a specific model result.