Q&A

Prompt Engineering with Azure OpenAI

Prompt engineering grabbed everyone's attention when the nascent discipline started commanding annual salaries north of $330,000 during the dawn of generative AI. The field has since matured, but it remains a critical aspect of working with large language models (LLMs) like those from OpenAI, which are trained on vast amounts of data in order to generate human-like text in response to natural language questions.

With maturation have come more sensible salaries -- and derision from "real" engineers -- but the importance of prompt engineering remains as AI and LLMs like GPT-4, Gemini and Llama 3.1 continue to evolve and expand their roles across industries.

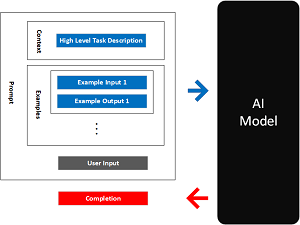

A Diagram Capturing the General Pattern for Few-Shot Learning (source: Microsoft).

Today, reasons to hone your prompt engineering skills include:

- Boost productivity and automate tasks: Prompt engineering enables IT professionals to maximize the efficiency of AI tools in automating tasks such as code generation, debugging, and documentation, improving workflow and saving time.

- Stay competitive with AI-driven tools: As AI becomes integrated into IT workflows (for example, GitHub Copilot, cloud management, DevOps), mastering prompt engineering gives professionals a competitive edge by allowing them to use these tools more effectively.

- Tailor AI solutions to industry-specific needs: IT professionals can customize AI outputs to fit the unique requirements of their industry or organization, whether it's cybersecurity, software development, or system administration.

- Enhance collaboration between humans and AI: Prompt engineering enables more seamless interaction with AI systems, ensuring optimal results and better synergy between human efforts and AI-driven outputs in various IT projects.

- Prepare for future AI roles and opportunities: Mastering prompt engineering offers a gateway to deeper roles in AI, machine learning, and natural language processing, positioning IT professionals for career growth in emerging AI-driven fields.

To help developers, data scientists and IT pros working with the Azure cloud get the most out of the powerful OpenAI system, AI expert Carey Payette will present a session titled "Prompt Engineering 101 with Azure OpenAI" at the November multi-conference Live! 360 Tech Con 2024 event in Orlando. This sprawling event combines multiple conferences targeting developers and IT pros involved with data, AI, security and more.

Payette, a senior software engineer at Trillium Innovations, will teach basic prompt engineering techniques that guide Azure OpenAI models toward more accurate and relevant output for specific use cases. In her 75-minute, introductory-level session, attendees will learn common patterns and strategies to get the most out of an LLM, specifically including:

- The anatomy of a prompt

- Techniques to shape a prompt to get desired results from an LLM

- How to set guardrails to ensure an LLM stays in its lane

In this short Q&A about her upcoming session, Payette shares insights on the key components of a prompt, common patterns and strategies for crafting effective prompts, and how to set guardrails to ensure that an LLM stays within the intended scope. She also discusses the challenges developers face when learning prompt engineering, how to experiment with and iterate on prompts to achieve the best results, and future trends in the field.

Pure AI: What inspired you to present a session on this topic?

Payette: LLMs are trained on a vast amount of information, yet there is a lot of confusion surrounding the techniques and practices needed to get the results you expect from them.

"Some organizations venture into fine-tuning models to support various scenarios, which is a costly endeavor when many times applying the appropriate prompts will yield the expected results."

Carey Payette, Senior Software Engineer, Trillium Innovations

Some organizations venture into fine-tuning models to support various scenarios, which is a costly endeavor when many times applying the appropriate prompts will yield the expected results.

Why is prompt engineering still a thing at this stage of LLM development? Why can't the models just optimize the prompts themselves?

Prompt engineering is still very critical when dealing with LLMs today. A well-crafted prompt provides the ability to guide a model into the correct format, tone, and structure that a system desires. There are techniques that can be applied to leverage a model to generate better prompts, but this is dependent on ... wait for it... a well-crafted prompt that directs a model on how to write better prompts!

What are the key components that make up the anatomy of a prompt in Azure OpenAI?

The key components of a prompt are the Instruction (telling the LLM what to do), Context (providing additional information or examples to the LLM), Input data (what the end user passes in), and Output indicator (further instructions on the desired output of the model).
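The four components can be pictured as a small template. The following is a minimal Python sketch, not code from the session: the `build_prompt` function, the section labels, and the sample review text are all illustrative assumptions, and nothing here is specific to the Azure OpenAI API.

```python
def build_prompt(instruction: str, context: str, input_data: str, output_indicator: str) -> str:
    """Assemble the four prompt components into one labeled string."""
    sections = [
        ("Instruction", instruction),
        ("Context", context),
        ("Input", input_data),
        ("Output format", output_indicator),
    ]
    # Skip empty components so an optional part does not leave a dangling label.
    return "\n\n".join(
        f"{label}:\n{text.strip()}" for label, text in sections if text.strip()
    )


prompt = build_prompt(
    instruction="Classify the sentiment of the customer review.",
    context="Reviews come from an online electronics store.",
    input_data="The headphones stopped working after two days.",
    output_indicator="Answer with one word: positive, negative, or neutral.",
)
print(prompt)
```

Keeping the components separate makes it easier to change one of them, such as the output indicator, without touching the rest.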

Can you elaborate on one or two common patterns and strategies for crafting effective prompts for specific use cases?

LLMs tend to struggle with larger, complex tasks. Chain of Thought prompting improves results by instructing the model to think things through and break the problem down into steps. Another popular technique is ReAct prompting, which stands for reason and act. An LLM orchestration can be armed with a set of tools that perform actions, such as looking up a record in a database or searching the web. With ReAct prompting, the LLM can reason through a prompt and use one or more of the tools at its disposal to build context and come up with a final answer.
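The ReAct loop described here can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: `scripted_model` is a stand-in for a real LLM call, `lookup_order` is a hypothetical tool with made-up data, and the `Action: tool[input]` text format is one common convention rather than anything required by Azure OpenAI.

```python
import re
from typing import Callable, Dict


def lookup_order(order_id: str) -> str:
    """Hypothetical tool: a stand-in for a database lookup."""
    orders = {"1001": "shipped", "1002": "processing"}
    return orders.get(order_id, "not found")


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_order": lookup_order}
ACTION_PATTERN = re.compile(r"Action: (\w+)\[(.*?)\]")


def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM: acts first, then answers once it sees an observation."""
    if "Observation:" not in transcript:
        return "Thought: I need the order status.\nAction: lookup_order[1001]"
    return "Thought: I have what I need.\nFinal Answer: Order 1001 has shipped."


def react(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate between model reasoning and tool calls until a final answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = ACTION_PATTERN.search(reply)
        if match is None:
            break  # The model neither acted nor answered, so stop instead of looping.
        tool_name, tool_input = match.groups()
        tool = TOOLS.get(tool_name)
        observation = tool(tool_input) if tool else f"unknown tool {tool_name}"
        # Feed the tool result back so the next model call can reason over it.
        transcript += f"\nObservation: {observation}"
    return "No answer within the step limit."


answer = react("Where is order 1001?", scripted_model)
print(answer)
```

In a real system the scripted function would be replaced by a call to a deployed model, and the step limit guards against a model that never produces a final answer.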

How do you set guardrails to ensure that an LLM stays within the intended scope and avoids generating inappropriate or off-topic responses?

When setting guardrails to ensure the LLM stays in scope and does not hallucinate, there are a couple of different approaches. The first is the temperature, sent as a parameter with the LLM/user prompt exchange. The lower the value, the more deterministic and factual the response; the higher the value, the more creative it can be. Depending on your desired outcome, this is an important parameter to set. Another approach is to add an instruction to the prompt itself that tells the LLM to use only information provided in the context and, if it does not know the answer, to say "I don't know."
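Both guardrails can be combined in a single request. The sketch below only builds the request arguments and makes no network call; the `build_chat_request` helper, the system prompt wording, and the sample context are assumptions for illustration. The `messages` and `temperature` names match the chat completions API, which accepts temperatures from 0 to 2.

```python
from typing import Any, Dict

# Illustrative wording; tune it for your own domain.
GUARDRAIL_SYSTEM_PROMPT = (
    "You are a support assistant. Answer using only the information in the "
    "provided context. If the context does not contain the answer, reply "
    "exactly: I don't know."
)


def build_chat_request(context: str, question: str, temperature: float = 0.0) -> Dict[str, Any]:
    """Return keyword arguments for a chat completion call with both guardrails applied."""
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature must be between 0 and 2")
    return {
        # A low temperature keeps the output close to deterministic.
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": GUARDRAIL_SYSTEM_PROMPT},
            {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
        ],
    }


request = build_chat_request(
    context="Store hours are 9 a.m. to 5 p.m., Monday through Friday.",
    question="Is the store open on Sunday?",
)
print(request["messages"][0]["content"])
```

With the `openai` Python package, these arguments would typically be passed along with a deployment name, for example `client.chat.completions.create(model="<your-deployment>", **request)`, where the deployment name is a placeholder.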

What challenges do developers typically face when learning prompt engineering, and how can they overcome them?

When learning prompt engineering it's important to be focused on a specific domain, persona, and behavior. It is not unusual to have a system with multiple "agents" each armed with their own expertise.

Many times, multiple prompt engineering techniques are thrown into a single system prompt, which confuses the LLM.

While it's not as much of a concern for modern models, keep in mind that the system prompt counts against the context window. A very long prompt can consume many of the available tokens and stifle the response, which must fit in whatever remains of the window.
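The budgeting concern comes down to simple arithmetic. The following sketch assumes an 8,192-token context window and a rough estimate of four characters per token for English text; both numbers are illustrative, and a real application would count tokens with the model's own tokenizer (for example, the `tiktoken` package).

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate only: about four characters per token, rounded up."""
    return (len(text) + 3) // 4


def remaining_tokens(context_window: int, system_prompt: str, user_prompt: str) -> int:
    """Tokens left for the response after both prompts are counted."""
    used = estimate_tokens(system_prompt) + estimate_tokens(user_prompt)
    return max(0, context_window - used)


CONTEXT_WINDOW = 8192  # assumed window size for illustration
system_prompt = "You are a helpful assistant. " * 200  # deliberately bloated
user_prompt = "Summarize the attached policy document."

left = remaining_tokens(CONTEXT_WINDOW, system_prompt, user_prompt)
print(f"Estimated tokens left for the response: {left}")
```

Trimming the system prompt raises the number directly, which is one reason to keep each agent's prompt focused.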

Can you share any insights or tips on how to experiment with and iterate on prompts to achieve the best results?

Many tools exist to measure and evaluate the performance of an LLM and system prompt combination, including the evaluation capabilities within Azure AI Studio. Azure AI Studio-based evaluations include quantitative metrics for groundedness, relevance, coherence, fluency, retrieval score, similarity, and F1 score.
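Of these metrics, F1 score is the easiest to reproduce by hand when comparing prompt variants. The sketch below uses the common word-overlap definition (the harmonic mean of precision and recall over shared words); the whitespace tokenization and the `token_f1` name are assumptions, and the exact computation in Azure AI Studio may differ.

```python
from collections import Counter


def token_f1(generated: str, reference: str) -> float:
    """Word-overlap F1 between a generated answer and a reference answer."""
    gen_tokens = generated.lower().split()
    ref_tokens = reference.lower().split()
    if not gen_tokens or not ref_tokens:
        return 0.0
    # Counter intersection keeps the minimum count of each shared word.
    shared = sum((Counter(gen_tokens) & Counter(ref_tokens)).values())
    if shared == 0:
        return 0.0
    precision = shared / len(gen_tokens)
    recall = shared / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


score = token_f1("the order has shipped", "the order shipped yesterday")
print(f"F1: {score:.2f}")
```

Running the same set of reference questions against each revision of a prompt and comparing the average score gives a simple, repeatable way to tell whether a change helped.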

What future trends do you foresee in the field of prompt engineering and its impact on AI applications?

Generative AI is still evolving at a rapid pace, and the prompt engineering landscape continues to shift along with it. Not only are LLMs becoming more powerful, but they are also supporting additional multi-modal formats. As an example, LLMs can now react to voice prompts and can identify whether the person is yelling or speaking normally. Images can also be part of an incoming prompt as well as an expected output. You can also have an LLM output a generated chart image based on specified data; with a slight change in the prompt, it can instead output a fully interactive chart! The future is a very exciting place for the application of generative AI!

Note: Those wishing to attend the conference can save hundreds of dollars by registering early, according to the event's pricing page. "Save $400 when you register by the Super Early Bird deadline of Sept. 27!" said the organizer of the event, which is presented by the parent company of Pure AI.

About the Author

David Ramel is an editor and writer at Converge 360.