How-To

Defending Machine Learning Image Classification Models from Attacks

By adding random noise to an image to be classified, and then removing the noise using a custom neural denoiser, standard image classification models are less likely to be successfully attacked.

- By Pure AI Editors

- 01/05/2021

A research paper titled "Denoised Smoothing: A Provable Defense for Pretrained Classifiers" describes a clever technique to augment an existing image classification model, or a commercial model such as those available from Microsoft, Google and Amazon, to make its predictions resistant to adversarial attacks. The paper was authored by H. Salman, M. Sun, G. Yang, A. Kapoor and J.Z. Kolter, and was presented at the 2020 Conference on Neural Information Processing Systems (NeurIPS). See https://arxiv.org/pdf/2003.01908.pdf.

Briefly, by adding random noise to an image to be classified, and then removing the noise using a custom neural denoiser, standard image classification models are less likely to be successfully attacked.

The Problem Scenario

Imagine a scenario where field employees of an elevator company are performing maintenance and discover a part in an elevator that needs replacing. The company has hundreds of different types of elevators, with thousands of different parts. The field employees take a photo of the worn part and upload it to an image classifier model on a company server. The model responds with an identification of the part, and the employees order a replacement.

Other examples of image classification include facial recognition systems, medical imaging diagnosis and so on. Deep neural architectures, such as convolutional neural networks, have made fantastic improvements in image classification. However, it has been known for several years that neural-based image classification models are quite brittle and are susceptible to adversarial attacks. In such an attack, an adversary slightly changes pixel values in an image. The modified image looks the same to the human eye, but the image is completely misclassified by the model.

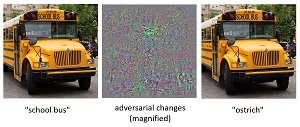

A well-known example was shown in the 2013 research paper "Intriguing Properties of Neural Networks" by C. Szegedy, et al. See Figure 1. When presented with an image of a school bus, a trained model correctly predicts "bus," but when some of the pixels are changed slightly, the modified image is classified as an "ostrich."

Figure 1: Neural based image classifiers are susceptible to attacks where slight changes in pixel values that are imperceptible to the human eye can produce grotesque misclassifications.
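To make the idea of a pixel-level attack concrete, here is a minimal sketch using a toy two-class linear scorer. It is not a neural network and not the model from the Szegedy paper. The weights, bias, pixel values and step size are all invented for illustration, and the perturbation is a simple sign-based step in the spirit of the fast gradient sign method. The only point is that a small, bounded change to every pixel, chosen in the direction that hurts the correct class, can flip the prediction.

```python
# Toy illustration only: a two-class linear scorer over 9 pixel values in [0, 1].
# WEIGHTS, BIAS and the image are made-up values, not taken from any real model.

WEIGHTS = [0.9, -0.8, 0.7, -0.6, 0.5, -0.9, 0.8, -0.7, 0.6]
BIAS = -0.35

def score(pixels):
    """Weighted sum of the pixels plus a bias."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS

def predict(pixels):
    """Positive score means "bus", anything else means "ostrich"."""
    return "bus" if score(pixels) > 0 else "ostrich"

def perturb(pixels, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the score."""
    moved = [p - epsilon * (1 if w > 0 else -1) for w, p in zip(WEIGHTS, pixels)]
    return [min(1.0, max(0.0, p)) for p in moved]  # keep pixels in the valid range

image = [0.5, 0.4, 0.5, 0.4, 0.5, 0.4, 0.5, 0.4, 0.5]
attacked = perturb(image, epsilon=0.05)

print(predict(image), predict(attacked))
```

No pixel moves by more than 0.05 on a 0-to-1 scale, yet the predicted class changes. Real attacks on deep networks are more elaborate, but they exploit the same kind of sensitivity.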

It's not likely that an elevator parts image classification system would be the target of an attack. But in problem domains such as medical, military, security, financial and transportation systems, the possibility of adversarial attacks must be considered.

Creating a custom image classification system, such as an elevator parts system described above, is possible but can be time-consuming and expensive. Companies such as Microsoft, Google and Amazon have created generic image classification models where users can upload an image through a web API and get a classification in response.

Custom image classification models and commercial models can be trained from the beginning to be resistant to adversarial attacks. But this approach is very difficult and typically results in models that aren't as accurate as models trained normally. The motivation for the "Denoised Smoothing" research paper was to devise a scheme that could augment a standard image classification model, without modifying it, to make the model more resistant to adversarial attacks.

The Denoised Smoothing Technique

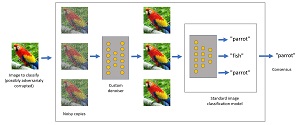

The denoised smoothing technique presented in the research paper is best explained using a diagram. See Figure 2. At the core of the system is a standard neural-based image classification model. The source image to classify, possibly with malicious perturbations, is fed into the denoised smoothing system. Several copies of the source image are created, and random noise is added to each copy. Then each of the noisy copies is denoised using a custom neural denoiser. The custom denoiser is the key to the technique. The denoised versions of the source image are sent to the standard image classifier, and a majority-rule consensus is applied to generate the final classification.

In effect, the noise that is deliberately added to the image to classify overwhelms any perturbations that might have been added by an adversary.

Figure 2: The denoised smoothing technique provides a defense for image classification models.
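The pipeline in the diagram can be sketched as a short loop: make noisy copies, denoise each copy, classify each copy, and take a majority vote. The sketch below assumes Gaussian noise and uses hypothetical stand-ins (`toy_denoiser`, `toy_classifier`) so that it runs on its own. The number of copies, the noise level and all function names are assumptions for illustration, not values from the paper.

```python
import random
from collections import Counter

def add_gaussian_noise(pixels, sigma, rng):
    """Return a copy of the image with independent Gaussian noise on each pixel."""
    return [p + rng.gauss(0.0, sigma) for p in pixels]

def smoothed_predict(pixels, denoiser, classifier, n_copies=100, sigma=0.25, seed=0):
    """Noise, denoise and classify each copy, then return the majority class and its vote share."""
    rng = random.Random(seed)
    votes = Counter()
    for _ in range(n_copies):
        noisy = add_gaussian_noise(pixels, sigma, rng)
        cleaned = denoiser(noisy)
        votes[classifier(cleaned)] += 1
    winner, count = votes.most_common(1)[0]
    return winner, count / n_copies

# Stand-ins so the sketch runs. A real system would plug in a trained neural
# denoiser and the pretrained (or API-hosted) classifier here.
def toy_denoiser(pixels):
    """Placeholder: clip to the valid range [0, 1]. Not a learned denoiser."""
    return [min(1.0, max(0.0, p)) for p in pixels]

def toy_classifier(pixels):
    """Placeholder: class 1 if the image is bright on average, else class 0."""
    return 1 if sum(pixels) / len(pixels) > 0.5 else 0

image = [0.8, 0.9, 0.7, 0.8, 0.9, 0.7, 0.8, 0.9, 0.7]
label, vote_share = smoothed_predict(image, toy_denoiser, toy_classifier)
print(label, vote_share)
```

The classifier itself is only ever called, never modified, which is why the same wrapper could in principle sit in front of a commercial web API.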

A standard neural denoiser is an autoencoder that computes an internal compressed representation of a source data item, and then un-compresses the internal representation. The result is a near-copy of the data item where the copy has been cleaned up to remove noise. A standard denoiser is trained by comparing the computed copy of an item with the original item, and then adjusting the neural weights so that the neural-generated copy closely matches the original. For example, suppose you are working with 3-by-3 pixel grayscale images (nine pixel values each), where each image represents one of three animals: "ant" (class 0), "bat" (1), or "cow" (2). An original image of a "bat" in a set of training data might be something like:

13, 107, 126, 132, 255, 132, 117, 84, 11, "bat"

A standard denoiser would reduce the pixels of the image to an internal representation of values, such as (0.32, -1.47, 0.95). Then the internal representation would be expanded to a cleaned near-copy that closely matches the original, such as:

10, 100, 120, 130, 150, 130, 110, 80, 10

Notice that a standard denoiser doesn't usually take the class label ("bat") into account.
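The compress-then-expand idea can be sketched in a few lines of plain Python. This is a minimal, purely linear autoencoder trained on the single "bat" image. The layer sizes, learning rate, step count and helper names (`matvec`, `train_step`) are assumptions chosen for illustration. A real neural denoiser would use nonlinear layers, many training images and noisy inputs.

```python
import random

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def train_step(encoder, decoder, pixels, lr):
    """One gradient-descent step on the reconstruction error; returns the loss before the step."""
    code = matvec(encoder, pixels)   # 9 pixels -> 3 internal values
    recon = matvec(decoder, code)    # 3 internal values -> 9 pixels
    n = len(pixels)
    grad_recon = [2 * (r - p) / n for r, p in zip(recon, pixels)]
    grad_code = [sum(grad_recon[i] * decoder[i][j] for i in range(n))
                 for j in range(len(code))]
    for i in range(n):
        for j in range(len(code)):
            decoder[i][j] -= lr * grad_recon[i] * code[j]
    for j in range(len(code)):
        for k in range(n):
            encoder[j][k] -= lr * grad_code[j] * pixels[k]
    return mse(recon, pixels)

rng = random.Random(0)
encoder = [[rng.uniform(-0.1, 0.1) for _ in range(9)] for _ in range(3)]
decoder = [[rng.uniform(-0.1, 0.1) for _ in range(3)] for _ in range(9)]

# The "bat" pixels, scaled to the range [0, 1].
bat = [p / 255 for p in [13, 107, 126, 132, 255, 132, 117, 84, 11]]

losses = [train_step(encoder, decoder, bat, lr=0.1) for _ in range(300)]
print(losses[0], losses[-1])
```

The class label never appears in the training step: only the pixel values drive the weight updates.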

The custom denoiser scheme described in the Denoised Smoothing research paper works differently than a standard denoiser. The custom denoiser takes the class label into account. Its neural weights are adjusted to meet two goals: the denoiser-generated pixel values should closely match the pixel values of the original image, and the classifier's output vector for the denoiser-generated image should closely match the classifier's output vector for the original image.

Suppose you feed the "bat" input pixel values above to a standard image classifier. The neural output vector will be three values that sum to 1.0 so that they can loosely be interpreted as probabilities, for example (0.20, 0.65, 0.15). The largest pseudo-probability, 0.65, is at index [1] and so the predicted class is [1] = "bat."
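Picking the predicted class from an output vector is just an arg-max. The short sketch below shows that step, along with a softmax function, which is the usual way a classifier turns raw scores into values that sum to 1.0. The use of softmax is an assumption here, and the names `softmax`, `predicted_class` and `CLASS_NAMES` are illustrative.

```python
import math

CLASS_NAMES = ["ant", "bat", "cow"]

def softmax(raw_scores):
    """Convert raw scores into positive values that sum to 1.0."""
    largest = max(raw_scores)
    exps = [math.exp(z - largest) for z in raw_scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]

def predicted_class(probabilities):
    """Return the index and name of the largest pseudo-probability."""
    index = max(range(len(probabilities)), key=probabilities.__getitem__)
    return index, CLASS_NAMES[index]

output = [0.20, 0.65, 0.15]
index, name = predicted_class(output)
print(index, name)
```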

When training the custom denoiser, the denoiser-generated image is also fed to the classifier, which again produces three pseudo-probabilities, for example (0.18, 0.69, 0.13). The weights of the custom denoiser are adjusted so that both the generated pixel values and the resulting classifier output more closely match those of the original image. Put another way, the custom denoiser accepts noisy images and generates copies that resemble the original images in two respects: their pixel values, and the output vector they produce from the standard image classifier.
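One way to read this two-part goal is as a training loss with a pixel term and a classifier-output term. The sketch below is a loose interpretation, not the paper's exact objective: the use of cross-entropy for the output term, the `weight` parameter and the function names are all assumptions, and the pixel and output values are illustrative.

```python
import math

def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def cross_entropy(target_probs, predicted_probs, eps=1e-12):
    """Cross-entropy of predicted_probs measured against target_probs."""
    return -sum(t * math.log(p + eps) for t, p in zip(target_probs, predicted_probs))

def custom_denoiser_loss(original_pixels, denoised_pixels,
                         original_output, denoised_output, weight=1.0):
    """Pixel mismatch plus (weighted) mismatch between the two classifier outputs."""
    pixel_term = mse(denoised_pixels, original_pixels)
    output_term = cross_entropy(original_output, denoised_output)
    return pixel_term + weight * output_term

# Illustrative values: pixels scaled to [0, 1], plus the classifier's output
# vector for the original image and for the denoiser-generated image.
original = [p / 255 for p in [13, 107, 126, 132, 255, 132, 117, 84, 11]]
denoised = [p / 255 for p in [10, 100, 120, 130, 150, 130, 110, 80, 10]]
loss = custom_denoiser_loss(original, denoised,
                            [0.20, 0.65, 0.15], [0.18, 0.69, 0.13])
print(loss)
```

Setting `weight=0.0` recovers the standard denoiser objective, which looks only at pixels. A larger weight pushes the denoiser toward images that the classifier treats the same way as the original.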

The Pure AI editors contacted H. Salman and G. Yang, two of the authors of the Denoised Smoothing research paper. The authors commented, "The surprising thing was that we were able to smooth out four different public vision APIs using the same denoiser and get non-trivial robustness guarantees from these previously non-robust APIs."

The authors added, "There are two natural next steps to our project: the first is training better denoisers to improve the guarantees we get from denoised smoothing, and the second is reducing the number of queries needed to obtain non-trivial robustness guarantees."

Wrapping Up

The research paper presents the results of many experiments that show the denoised smoothing technique is quite effective against an adversarial attack that perturbs pixel values. Additionally, the paper quantifies how effective the technique is using a concept called certification. Briefly, certification gives a robustness guarantee: the classifier's prediction is guaranteed to remain constant within a neighborhood of a given input.
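As a rough illustration of what such a guarantee looks like, the sketch below uses the certified-radius formula from the randomized smoothing literature that denoised smoothing builds on: with Gaussian noise of standard deviation sigma, if the top class wins with probability at least p_top, the prediction cannot change within an L2 distance of sigma times the inverse normal CDF of p_top. The function name and the example values are assumptions. A real certification procedure would also use a statistical lower bound on p_top estimated from many noisy samples, which is omitted here.

```python
from statistics import NormalDist

def certified_radius(p_top, sigma):
    """L2 radius within which the smoothed prediction is guaranteed not to change.

    p_top is a lower bound on the probability of the winning class and must be
    strictly less than 1.0. A value of 0.5 or less gives no guarantee.
    """
    if p_top <= 0.5:
        return 0.0
    return sigma * NormalDist().inv_cdf(p_top)

for p_top in (0.6, 0.8, 0.99):
    print(p_top, certified_radius(p_top, sigma=0.25))
```

The radius grows with the vote share of the winning class, which is one reason a better denoiser (more consistent votes) translates into a stronger guarantee.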

Dr. James McCaffrey, who works on machine learning at Microsoft Research in Redmond, Wash., reviewed the Denoised Smoothing research paper for Pure AI. McCaffrey commented, "Many research papers such as those presented at the NeurIPS conference are pure research with no view towards immediate application." McCaffrey continued, "The denoised smoothing paper hits a sweet spot where the problem scenario is important, the ideas presented are theoretically relevant, and the technique can be implemented in practice."