At GTC, Nvidia Offers Infrastructure Cures for AI's Scaling Problems

Modern AI systems are under pressure to deliver "inference at scale," said Nvidia CEO and founder Jensen Huang on Tuesday during his GTC conference keynote. Luckily, his company has the hardware for that.

In recent years, GTC -- Nvidia's annual event for showcasing new and upcoming products -- has become a marquee industry conference and a bellwether for AI and cutting-edge technology trends. As Huang noted, the 2025 edition has drawn professionals from a broad swath of industries, including healthcare, transportation and retail, to San Jose, Calif. And, of course, "Everybody in the computer industry is here."

Several factors have made the focus on GTC especially intense this year. It's no secret that generative AI is one of the most disruptive technologies in recent memory, driving a gold rush for the hardware to support its development. However, much of that hardware is made overseas, meaning any tariffs imposed by U.S. President Donald Trump could drive the price of AI-capable silicon skyward. Meanwhile, startup DeepSeek is rattling industry stalwarts by showing that an open source AI model can be as capable as proprietary ones at a fraction of the infrastructure cost.

Blackwell to the Rescue

For his part, Huang was mum about tariffs, but clear about infrastructure costs. "The amount of computation we need at this point [because of agentic AI and advanced inferencing models] is easily a hundred times more than we thought we needed this time last year," he said in the keynote.

Proto-AI models, Huang argued, were not capable of chain-of-thought reasoning, consistency checking, reinforcement learning or other sophisticated logical processes. Today's models are, and they consume vastly more tokens as a result. AI's current Big Problem is achieving inference at scale -- that is, running increasingly complex models on massive datasets while keeping costs manageable, latency low and performance high.

Solving the problem, Huang asserted, will require massive infrastructure investments, including as much as $1 trillion in datacenter spending over the coming years. "The future of software requires capital investment," he said. "This is a very big idea."

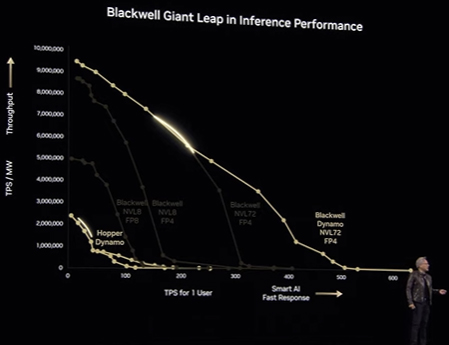

Nvidia's Blackwell platform promises to be up to the task of achieving inference at scale. First announced at last year's GTC event, the Blackwell processors are now "in full production," Huang said in the keynote. As one of his slides indicated, Blackwell represents a "giant leap in inference performance" over its predecessor, Hopper.

Huang also announced the open source Dynamo model accelerator, describing it as "the operating system of an AI factory." Combining Blackwell with Dynamo, he said, would achieve "ultimate Moore's Law."

AI Computing Powered by DGX

Huang agreed with what most industry watchers have identified as the biggest trend in enterprise computing: AI agents.

"A hundred percent of software engineers in the future...will be AI-assisted. I'm certain of that," he said, adding, "What enterprises run and how they run it will be fundamentally different."

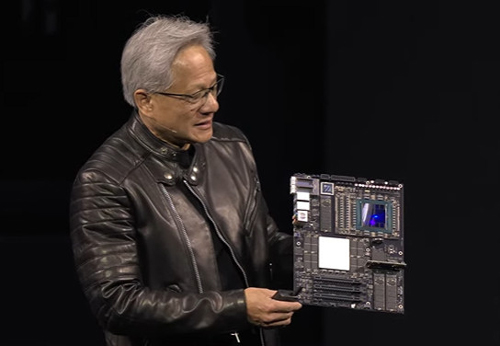

To accommodate the new capabilities and demands of AI agents, Nvidia is launching two new Blackwell-powered supercomputing platforms, the DGX Spark and DGX Station. As Redmondmag.com's Chris Paoli wrote in his GTC coverage:

"These systems provide AI developers, researchers and data scientists with powerful tools to prototype, fine-tune and deploy large-scale AI models from their desktops."

Available from all Nvidia OEM partners, DGX is the "computer for the age of AI," Huang said. "This is what computers should look like."

What's Next

With Blackwell now in the market, Huang outlined what's next on Nvidia's GPU roadmap.

- A Blackwell upgrade named Blackwell Ultra NVL72 is scheduled for the second half of 2025.

- A brand-new platform, Vera Rubin NVL144, will arrive in the second half of 2026.

- Its upgrade, the Rubin Ultra NVL576, is in development for the second half of 2027.

- And in 2028, Nvidia will launch its new Feynman-branded GPUs.