Next-Gen Small Language 'Phi' Model from Microsoft Released

Microsoft is promising high performance in a small package with this week's release of Phi-3, the latest version of its small language model (SLM).

The new Phi-3 models "are the most capable and cost-effective small language models (SLMs) available," according to Misha Bilenko, chief of Microsoft Generative AI, in a blog post Tuesday.

The Phi-3 models are designed to be compact enough to run on edge devices, whether or not those devices are connected to the Internet. This opens new avenues for generative AI use cases, Microsoft argues.

"What we're going to start to see is not a shift from large to small, but a shift from a singular category of models to a portfolio of models where customers get the ability to make a decision on what is the best model for their scenario," said Sonali Yadav, principal product manager for Generative AI at Microsoft, in a separate post.

The first model in the Phi-3 family is Phi-3-mini, a 3.8-billion-parameter language model that will be available in two context-length variants, 4K and 128K tokens. Users can choose the variant that best suits their application requirements and compute needs.
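As a rough illustration of that choice, the sketch below picks the smaller variant whenever a request fits in it. It is not from Microsoft: the `pick_variant` helper is hypothetical, and the window sizes assume "4K" and "128K" mean 4 x 1,024 and 128 x 1,024 tokens and that the window must hold both the prompt and the generated output.

```python
# Context-window sizes, in tokens, for the two Phi-3-mini variants.
# Assumption: "4K" and "128K" mean 4 * 1024 and 128 * 1024 tokens.
CONTEXT_VARIANTS = {"4K": 4 * 1024, "128K": 128 * 1024}


def pick_variant(prompt_tokens: int, max_new_tokens: int) -> str:
    """Return the smallest variant whose window fits prompt plus output.

    Hypothetical helper for illustration; token counts are supplied by
    the caller (for example, from the model's tokenizer).
    """
    needed = prompt_tokens + max_new_tokens
    # Try variants from smallest window to largest.
    for name, window in sorted(CONTEXT_VARIANTS.items(), key=lambda kv: kv[1]):
        if needed <= window:
            return name
    raise ValueError(f"{needed} tokens exceeds the largest context window")
```

Under these assumptions, a short chat turn lands on the 4K variant, while a long document summary would call for the 128K one.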

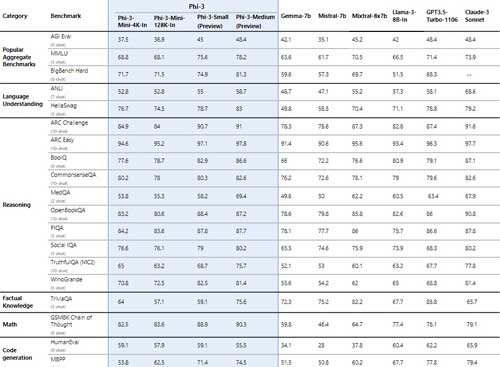

In its own head-to-head benchmark tests, Microsoft found that Phi-3-mini outperforms models twice its size.

Figure 1. Results of benchmark tests by Phi-3 and competitors. (Source: Microsoft)

Phi-3-mini is instruction-tuned, meaning it has been trained to interpret and carry out a variety of instructions phrased the way people naturally communicate. Microsoft said this makes the model intuitive and ready to use out of the box, allowing for smoother, more natural conversations between people and machines.

To accommodate a wide range of deployment environments, Phi-3-mini is available through several channels. It is offered through Azure AI and can also be run locally on developers' laptops via Ollama.

Additionally, the model has been optimized for ONNX Runtime and supports Windows DirectML, providing cross-platform compatibility across GPUs, CPUs and mobile devices. Moreover, Phi-3-mini can be deployed as an NVIDIA NIM microservice, featuring a standardized API interface that is optimized for NVIDIA GPUs.
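For readers who want to try the local Ollama route mentioned above, here is a minimal sketch using only the Python standard library. It assumes an Ollama server is already running on its default local port (11434) and that the model has been pulled under the tag `phi3`; the tag and the helper names `build_request` and `generate` are assumptions for illustration, not details from Microsoft's announcement.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-shot text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumed model tag; check `ollama list` for the tag on your machine.
MODEL_TAG = "phi3"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build the HTTP POST request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = MODEL_TAG, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With streaming disabled, the reply is one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

With the server running, calling `generate("Explain what a small language model is in one sentence.")` should return the model's reply as a string. Nothing leaves the machine, which matches the offline, on-device use case Microsoft describes.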

Microsoft plans to release two other Phi-3 models, Phi-3-small (7 billion parameters) and Phi-3-medium (14 billion parameters), in the coming weeks. These larger models are said to outperform OpenAI's GPT-3.5 Turbo despite being much smaller.

As with all of Microsoft's language models, the company said it has prioritized safety and privacy in the development of the Phi-3 family. "Phi-3 models underwent rigorous safety measurement and evaluation, red-teaming, sensitive use review and adherence to security guidance to help ensure that these models are responsibly developed, tested, and deployed in alignment with Microsoft's standards and best practices," wrote Bilenko.