Anthropic's Claude 3 Models Land on AWS Bedrock

UPDATE, 4/16: Claude 3 Opus is now available on Bedrock.

Amazon Web Services' $4 billion investment in generative AI firm Anthropic is paying dividends.

Last week, the cloud giant announced that it is offering two of Anthropic's Claude 3 AI models -- specifically, the Sonnet and Haiku models -- on its Bedrock platform, with Claude 3 Opus slated for availability in the coming weeks.

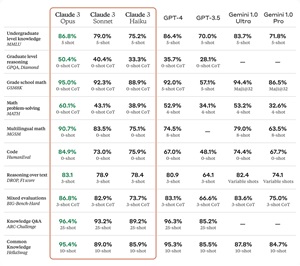

Anthropic debuted Claude 3 earlier this month. Opus, the most advanced of the three models, "outperforms its peers," according to Anthropic's tests.

AI Performance Comparison (source: Anthropic).

Amazon Bedrock is the first and currently only managed service to offer the Claude 3 family of models. While Claude 3 Sonnet can be tried out in private preview on Google's Vertex AI service, general availability in a managed cloud service is exclusive to AWS -- which Amazon was quick to crow about.

"Data from Anthropic shows that Claude 3 Opus, the most intelligent of the model family, has set a new standard, outperforming other models available today -- including OpenAI's GPT-4 -- in the areas of reasoning, math, and coding," AWS said. "Claude 3 Sonnet offers the best combination of skills and speed, with twice the speed of earlier Claude models (for the vast majority of workloads) but higher levels of intelligence. While Claude 3 Haiku, designed to be the fastest, most compact model on the market, is one of the most affordable options available today for its intelligence category, with near-instant responsiveness that mimics human interactions."

A March 4 blog post explains the benefits of the Claude 3-backed service:

- Improved performance -- Claude 3 models are significantly faster for real-time interactions thanks to optimizations across hardware and software.

- Increased accuracy and reliability -- Through massive scaling as well as new self-supervision techniques, expected gains of 2x in accuracy for complex questions over long contexts mean AI that's even more helpful, safe, and honest.

- Simpler and secure customization -- Customization capabilities, like retrieval-augmented generation (RAG), simplify training models on proprietary data and building applications backed by diverse data sources, so customers get AI tuned for their unique needs. In addition, proprietary data is never exposed to the public internet, never leaves the AWS network, is securely transferred through VPC, and is encrypted in transit and at rest.

AWS noted that all three new models have advanced vision capabilities, which let them process diverse data formats and analyze image data. That addresses customers' growing demand for models that better understand visual assets such as charts, graphs, technical diagrams and photos.

The company also announced an expansion of its partnership with Anthropic.

"As part of the news, Anthropic said it will use AWS Trainium and Inferentia chips to build, train, and deploy its future foundation models -- benefitting from the price, performance, scale, and security of AWS, with the two companies collaborating on the development of future Trainium and Inferentia technology," the cloud giant said. "It was also announced that AWS will become Anthropic's primary cloud provider for mission critical workloads, including safety research and future foundation model development, and that Amazon would invest up to $4 billion in Anthropic."

About the Author

David Ramel is an editor and writer at Converge 360.