AI Castaway

Citation Needed: Putting 7 AI Research Tools to the Test

AI gets a bad rap for being a fount of misinformation (and for good reason). But what about all the ways it can be used to find the truth?

- By Ginger Grant

- 03/26/2024

There is a lot of discussion around using AI tools to help people with scholastic assignments and academic papers. I have also read a number of stories about students using AI tools (and the inevitable issues they have with their grades when they're caught by instructors).

AI tools do not have to be used for nefarious purposes. You can use AI to help you research a subject and get references to studies that you might not find in standard search engines. If you are currently in school or researching a topic where you need to cite studies in a particular subject area, you may want to use AI to help you.

One thing to remember when researching any topic with AI is to check the underlying source references provided. The key is to provide good prompts to get the answers you need -- and to make sure those answers are not made up.

To see how well and how accurately today's generative AI tools can surface data for students and researchers, I put seven of them to the test: ChatGPT 4, Scite, Consensus, Elicit, Litmaps, Perplexity and SciSpace. I gave each one the same prompt: "Provide research and statistics for employee retention."

OpenAI's ChatGPT 4

Is there anything that ChatGPT cannot do? It's worth noting, though, that I used the paid version of ChatGPT for this column, because the free version has a lot of limitations. Also, many of the other tools mentioned here rely on OpenAI's models to work with their data sources.

When I prompted ChatGPT 4 about employee retention data, it returned a list of summary information about the topic, with links to the sources it used to create the summary. It told me that it was referencing a "comprehensive overview" from 2024, which was nice -- it was the only tool that focused on current research by default.

ChatGPT 4 marks each source with a small bracketed quotation-mark symbol and links to it. When I tried it, the same source documents were often listed multiple times, so while it looks like you have a lot of sources, you may in fact have a lot of duplicates. For example, the summary I generated with ChatGPT 4 had 13 references, but they pointed to only five different articles.

None of the articles was from a source I was familiar with, and none referenced any studies. When I modified my prompt to "Provide research from scholarly sources on employee retention," I got information from Frontiers in Psychology and MDPI.com.

In the end, ChatGPT 4 returned the fewest articles of any of the tools I tested, but the best summaries of the documents it referenced.

Scite.ai

Scite has a number of great resources to help you enter the right prompt to get detailed information from scientific publications and understand how they cite one another. This way, you can get to the primary source or the original study that's referenced in other publications. You can also upload your PDFs for Scite to analyze.

Scite uses ChatGPT to research articles. It also has a section to help students write papers, though that feature costs $20 a month if you don't cancel before the trial ends. I used the seven-day free trial, which nevertheless required me to create an account and enter a credit card.

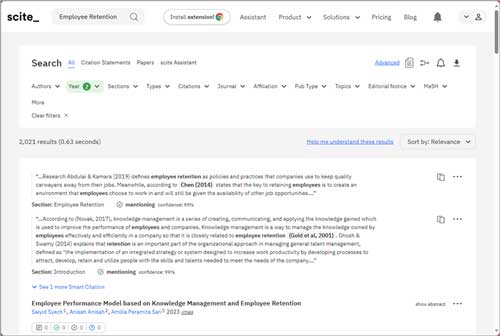

Like the other tools here, Scite includes summaries of existing scholarly works, so you can save time by reading the important parts instead of poring over long papers and articles. It has a lot of different filters to help you narrow down the returned results and, as shown in the screenshot below, provides a "Help me understand these results" link for each query so you can actually make sense of what you're looking at. It also includes detailed documents and videos to help you learn the tool's capabilities.

Figure 1. Scite provides a "Help me understand these results" link for each query so you can make sense of what you're looking at.

Because Scite references scholarly journals, you may not have access to all of the source documents unless you pay for the papers. Helpfully, Scite provides so many results that you can sift through them to find ones that are free.

Consensus.app

If you are looking for a "go-to source in the search for expert knowledge" and are OK with your answers not always being correct, you might be interested in Consensus.

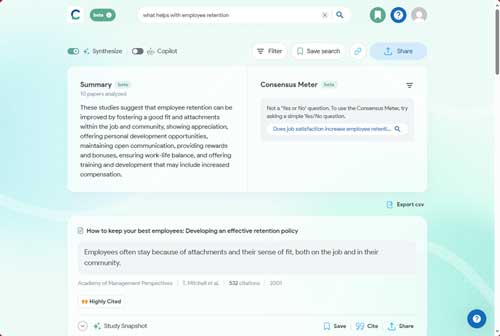

Consensus requires you to create an account to use it. It promises to anonymize search results and not share your data. It is also in beta, so hopefully that privacy promise will not change once it becomes generally available. There's a "Synthesize" option to generate summaries, as well as a "Copilot" option to "get answers, generate content, and more."

It also warns you that the answers it gives may not be correct. Because I am always interested in the source material and not just the summaries, I checked the returned results. Every result I checked referenced an actual paper but, after reading Consensus' disclaimer about accuracy, I would not skip validating the information.

Figure 2. Consensus lets you toggle between a "Synthesize" option to generate summaries and a "Copilot" option to "get answers, generate content, and more."

The free Consensus license has limited uses, and each use eats up some AI credits. If you want to use this tool a lot, you may need a paid account. That said, it did point me to several papers that I could read and reference for free.

Elicit.com

With a database of 200 million research papers to search, one ought to be able to find something applicable to one's research.

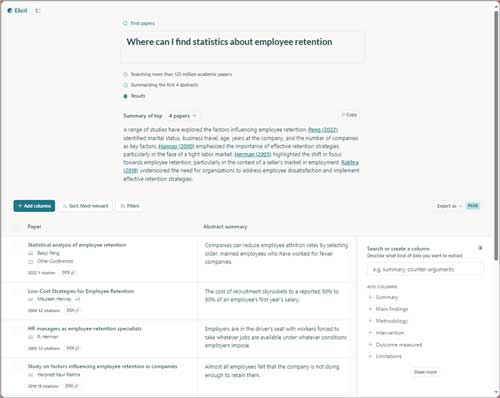

Elicit has a free version with 5,000 free credits, and a $10-a-month version with 12,000 credits per month. What can you do with a credit? According to Elicit's Web site, "Elicit uses a credit system to pay for the costs of running our app. When you run workflows and add columns to tables it will cost you credits." It also states, "A good rule of thumb is to assume that around 90% of the information you see in Elicit is accurate." I signed up for the free version.

Figure 3. Most of the results generated by Elicit may require additional money to download or read.

Once again, I asked for information on employee retention, and I checked the resulting references to confirm that they were real. The issue with Elicit, though, is that most of the articles it referenced required payment to download or read, so keep that in mind when deciding whether this site will be helpful to you.

Litmaps.com

If you want a visual representation of how the results relate to your query and to one another, check out Litmaps. It says it has millions of published scientific articles that it will review based on your prompt, as long as you sign up for a free account.

Litmaps works best for finding articles related to another article. I gave it the name of an article I found in a previous search, and Litmaps found that article and others related to it. The map it generated, shown below, was very interesting. Looking at the map, I agreed with where it placed the results I didn't find relevant. For example, an article on emotional intelligence sits at the bottom of the map, which is appropriate, as it did not seem to fit my original prompt well.

Figure 4. Litmaps works best for finding articles related to another article.

If you hover over a dot, Litmaps will highlight the associated article. None of the research cited was invented, and the map was a rather interesting visual addition.

Perplexity.com

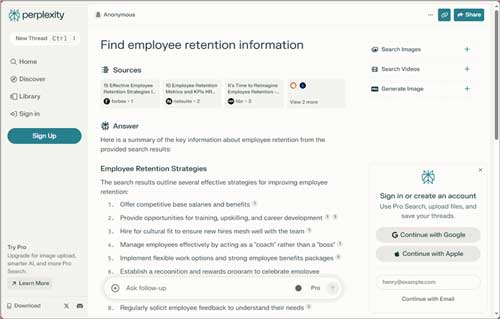

Perplexity is an easy-to-use Web site that allows you to try it out without having to sign up. For each prompt, it provides you five sources with a lot of summary information, generating results based on OpenAI's GPT-4V and Anthropic's Claude 3.

In the screenshot below, you'll see the sources that it returned for my prompt about employee retention. You will notice that the sources were from popular media sites like Forbes and Harvard Business Review.

Figure 5. For each prompt, Perplexity provides you five sources with a lot of summary information.

The information was superficial and not scholarly in nature. Perhaps you get what you pay for. If you create an account, there are more options, but they don't necessarily mean more in-depth results; I don't see any references on the Perplexity site to it having a database of scholarly articles -- unlike, for instance, Elicit.

SciSpace (Typeset.io)

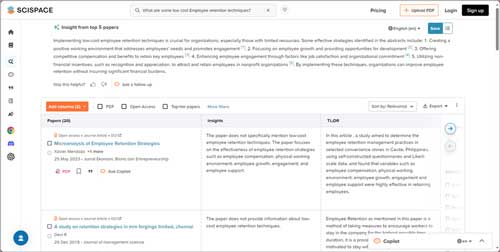

SciSpace, which you can try for free, is designed to help people better understand research papers. I like how it calls summaries "TL;DRs." Given how long some of these articles are, that is very appropriate.

In addition to finding papers for you, it can also change your writing style using its "Paraphraser" option, with styles including persuasive, diplomatic and passive-aggressive (I did not realize there was a demand for passive-aggressive writing). If SciSpace's built-in styles do not fit, you can enter your own style.

I picked "Yoda." I pasted in the first sentence of this section (before edits) and got this: "Help people better understand research papers, this site is designed to do. Try it for free, you can." I had so much fun with it that I ran out of free tries, of which you only get three.

The research returned by SciSpace is rather in-depth, which may be why it takes a while to load. It is also based on ChatGPT.

Figure 6. SciSpace summaries, which it calls "TL;DRs," are designed to help people better understand research papers.

Summary

All of the sites I tested provided information that I could see being useful in a paper on employee retention, though the depth varied. ChatGPT 4 did not return many results, so I would likely use another tool. The information from Perplexity was similar to what I might find with a standard Web search. However, Perplexity can analyze PDFs uploaded by users, which is an interesting feature. Litmaps has an interesting interface and returned a lot of different articles, but some were only tangentially related to the query. I am sure that makes for a better-looking map, but it did not help as much with my research.

Scite, Consensus, Elicit and SciSpace all provided a lot of great information. Because Scite gives you seven free days of a full-featured account, with more features and credits than the other sites, I thought it was the best research tool I reviewed here. If I had accounts with all of the features unlocked, I might have picked Consensus, Elicit or SciSpace, as they provided similar information, but their free tiers held back more of their features.

SciSpace was a close second. I liked the fact that it returned 20 results for my prompt and that it included other features like recreating your text using different writing styles.

The bottom line is that any one of these AI tools can help you research a topic and gives you easy access to an amazing amount of information. There is no doubt that the quality of research you can do in a short amount of time with these tools will be a game changer.

About the Author

Ginger Grant is a Data Platform MVP who provides consulting services in advanced analytic solutions, including machine learning, data warehousing, and Power BI. She writes articles and books, blogs at DesertIsleSQL.com, and uses her MCT (Microsoft Certified Trainer) credential to provide data platform training in topics such as Azure Synapse Analytics, Python and Azure Machine Learning. You can find her on X/Twitter at @desertislesql.