
Researchers Take AI Agents to the Next Level with the AutoGen Framework

The next significant step in the evolution of AI agents will most likely be systems in which multiple agents can interact with each other and with humans.

- By Pure AI Editors

- 03/01/2024

An active open-source project named AutoGen is taking AI agents, such as those based on the OpenAI GPT-4 large language model (LLM), to the next level by enabling the creation of systems of interacting agents. It's not an exaggeration to say that AI agents such as ChatGPT and DALL-E stunned the world when they were first released just over a year ago. The most likely next significant step in the evolution of AI agents is systems in which multiple agents can interact with each other and with humans.

The AutoGen Framework

AutoGen is a software framework for developing LLM systems that use multiple agents, which communicate and interact with each other to solve complex tasks. The somewhat unfortunate name "AutoGen" suggests that the framework automatically generates systems, but it does not. The name is historical and reflects early versions that had a different set of goals.

AutoGen is a collaborative research effort from Microsoft, Penn State University and the University of Washington. This article summarizes some of the information on the rather intimidating AutoGen web site.

AI agents built with AutoGen are customizable and can incorporate human participation. They can operate in various modes, using combinations of LLMs, human input and software tools.

AutoGen makes it possible to build next-generation LLM applications that use multiple agents with only moderate effort, at least compared to creating multi-agent systems from scratch. It greatly simplifies the coordination and optimization of complex LLM workflows, and it is designed to maximize the performance of the underlying LLMs while overcoming some of their weaknesses.

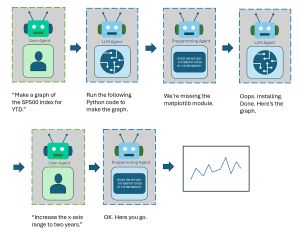

Figure 1: AutoGen Multi-Agent Session

A good way to understand what AutoGen can do is to examine the diagram in Figure 1. It illustrates a hypothetical session involving an AutoGen User Agent (most often a human, but possibly an AI application like ChatGPT), an AutoGen standard conversational AI Agent and an AutoGen Programming Agent.

The human User Agent initiates the interaction by requesting a graph of the S&P 500 index. The AI Agent responds with basic Python code to execute. The Programming Agent informs the other two agents that a prerequisite code library module is missing. After the requested graph has been created, the User Agent requests a modification, and the Programming Agent makes the change and produces the final graph.
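At its core, a session like the one in Figure 1 is a set of agents taking turns on a shared conversation history. The plain-Python toy below sketches that turn-taking loop under stated assumptions: it does not use AutoGen's API (AutoGen itself supplies agent classes such as AssistantAgent and UserProxyAgent), the ToyAgent class and scripted helper are hypothetical, canned replies stand in for LLM, tool and human responses, the dialogue is an invented and condensed version of the figure, and the "TERMINATE" stop word is simply a convention of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

Message = Tuple[str, str]  # (sender name, message text)


@dataclass
class ToyAgent:
    """Hypothetical agent; reply_fn stands in for an LLM, a software tool or a human."""
    name: str
    reply_fn: Callable[[List[Message]], str]

    def reply(self, history: List[Message]) -> str:
        return self.reply_fn(history)


def scripted(replies: Iterable[str]) -> Callable[[List[Message]], str]:
    """Hypothetical helper: return canned replies in order, then "TERMINATE" once exhausted."""
    it = iter(replies)
    return lambda history: next(it, "TERMINATE")


def run_conversation(agents: List[ToyAgent], first: Message, max_turns: int = 10) -> List[Message]:
    """Round-robin turn-taking over one shared history; stops on "TERMINATE" or after max_turns."""
    history: List[Message] = [first]
    for turn in range(max_turns):
        speaker = agents[turn % len(agents)]
        text = speaker.reply(history)  # every agent sees the full conversation so far
        history.append((speaker.name, text))
        if text == "TERMINATE":
            break
    return history


# Invented, condensed script loosely following Figure 1.
assistant = ToyAgent("Assistant", scripted([
    "Here is Python code that plots the index.",
    "Here is the code with the requested change.",
]))
programmer = ToyAgent("Programmer", scripted([
    "Execution failed: a required library module is missing.",
    "The modified code ran and produced the final graph.",
]))
user = ToyAgent("User", scripted([
    "Module installed; the graph works. Please make one modification.",
]))

history = run_conversation([assistant, programmer, user], ("User", "Plot the S&P 500 index."))
for sender, text in history:
    print(f"{sender}: {text}")
```

Round-robin ordering is the simplest possible speaker-selection policy; a real multi-agent framework could instead let a manager agent or an LLM decide who speaks next.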

Teachable Agents

A critical limitation of the first generation of AI agents and applications such as ChatGPT is that they have no long-term memory. Suppose you have a conversation with an AI agent about restaurant recommendations, and you give the agent information such as your preference for Italian food. When you end the conversation, that information is lost, and your next session starts from scratch.

There are relatively simple ways to add crude long-term memory to an AI system. Briefly, the text of conversations can be saved to a traditional database and associated with a user. At the start of a new session, that user's data is loaded into the system. Before each query/prompt is sent to the AI agent, the system searches the user's data, and any relevant information is simply added to the query/prompt.
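The crude approach just described can be sketched with Python's built-in sqlite3 module. This is a minimal illustration, not AutoGen code: the table layout and the helper names (open_memory, save_turn, build_prompt) are hypothetical, and naive keyword overlap on words longer than three letters stands in for real relevance search.

```python
import sqlite3
from typing import List, Set


def _keywords(text: str) -> Set[str]:
    """Naive relevance signal: lowercase words longer than three letters, punctuation stripped."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return {w for w in words if len(w) > 3}


def open_memory(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the conversation database; pass a file path to persist between runs."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS memory (user_id TEXT, text TEXT)")
    return conn


def save_turn(conn: sqlite3.Connection, user_id: str, text: str) -> None:
    """Save one piece of conversation text, associated with a user."""
    conn.execute("INSERT INTO memory (user_id, text) VALUES (?, ?)", (user_id, text))
    conn.commit()


def build_prompt(conn: sqlite3.Connection, user_id: str, query: str) -> str:
    """Search this user's saved text and prepend any relevant lines to the query."""
    rows = conn.execute(
        "SELECT text FROM memory WHERE user_id = ? ORDER BY rowid", (user_id,)
    ).fetchall()
    query_words = _keywords(query)
    relevant: List[str] = [text for (text,) in rows if _keywords(text) & query_words]
    if not relevant:
        return query  # nothing relevant: send the query unchanged
    facts = "\n".join(f"- {fact}" for fact in relevant)
    return f"Known facts about this user:\n{facts}\n\nUser question: {query}"


conn = open_memory()
save_turn(conn, "alice", "I prefer Italian food.")
save_turn(conn, "alice", "My dog is named Rex.")
save_turn(conn, "bob", "I love Italian opera.")

prompt = build_prompt(conn, "alice", "Which Italian restaurant should I try?")
print(prompt)
```

Passing a file path instead of ":memory:" to open_memory keeps the data between program runs, which is what gives this approach its long-term character.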

Figure 2: AutoGen Teachable Agents

The AutoGen framework has an optional module that lets users go beyond simple memory by allowing agents to learn new skills. See the blog post "AutoGen's Teachable Agents." The mechanism is illustrated in Figure 2.

Teachability persists user interactions across session boundaries in a long-term memory implemented as a specialized vector database. Instead of copying the entire memory into the query/prompt, the agent retrieves individual memories (called memos) into context only as needed. This allows the user to teach frequently used facts and skills to the teachable agent just once and have it recall them in later sessions.
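The memo-retrieval idea can be illustrated with a small in-memory store. This is a sketch under loud assumptions, not AutoGen's Teachability implementation: word-count vectors compared by cosine similarity stand in for the learned embeddings of a real vector database, and the MemoStore class, its k and threshold parameters, and the sample memos are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a real system would use a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class MemoStore:
    """Hypothetical memo store: each memo pairs a lookup key with the text to recall."""

    def __init__(self) -> None:
        self.memos: List[Tuple[Counter, str]] = []

    def add(self, key: str, memo: str) -> None:
        """Teach once: store the memo under the vector of its key."""
        self.memos.append((embed(key), memo))

    def retrieve(self, query: str, k: int = 2, threshold: float = 0.3) -> List[str]:
        """Return up to k memos whose keys are most similar to the query, if similar enough."""
        q = embed(query)
        scored = sorted(
            ((cosine(q, vec), memo) for vec, memo in self.memos),
            key=lambda pair: pair[0],
            reverse=True,
        )
        return [memo for score, memo in scored[:k] if score >= threshold]


store = MemoStore()
store.add("preferred cuisine food restaurant", "The user prefers Italian food.")
store.add("report format charts", "Always label chart axes with units.")
store.add("python coding style", "Use type hints in Python code.")

question = "Suggest a restaurant with good food"
memos = store.retrieve(question)
prompt = "\n".join(memos + [question])  # only the relevant memos enter the context
print(prompt)
```

Only memos whose keys resemble the query reach the prompt, which keeps the context short no matter how many memos accumulate. A real store would also persist the memos to disk so they survive across sessions.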

Wrapping Up

The Pure AI editors spoke about AutoGen with Dr. James McCaffrey from Microsoft Research. McCaffrey commented, "AutoGen and similar efforts such as ChatGPT's Manage Memory feature are clearly going to take AI systems to a new level of sophistication and capability. It's not difficult to imagine multi-agent systems evolving to a point where they begin learning without any human interaction at all."

Ricky Loynd, the primary architect of the AutoGen Teachable Agents module, observed, "AutoGen is primarily a research framework, but the gap between research code and productization code is shrinking." He echoed McCaffrey's opinion, noting that his current effort is to "enable learning from the agent's own experience, to reduce dependence on explicit user teachings."