In-Depth

Researchers Identify Chess Players from Their Game Moves

- By Pure AI Editors

- 03/03/2022

Machine learning researchers have demonstrated that it's possible to accurately predict the identity of a chess player based on the sequence of positions from their chess games. The technique used in the research could potentially be applied to identifying people from a wide range of behaviors.

The research is presented in the paper "Detecting Individual Decision-Making Style: Exploring Behavioral Stylometry in Chess" by R. McIlroy-Young, R. Wang, S. Sen, J. Kleinberg and A. Anderson. The source chess game data was harvested from the popular open source chess website lichess.org, which stores millions of chess games. The Lichess games range from those played by beginners to those played by elite grandmasters such as Magnus Carlsen (Norway, current world champion), Ian Nepomniachtchi (Russia, 2021 world championship challenger) and Wesley So (current U.S. champion).

How the Chess Player Identification System Works

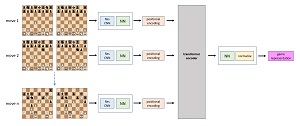

The diagram in Figure 1 illustrates how the chess player identity prediction works. The input into the prediction system is a sequence of chess moves. The output of the system is a vector of numeric values that's an abstract representation of the input game. The numeric representation is an encoding of the input chess game that takes the sequence of moves into account. This is important because a specific chess position can arise from different move orders.

[Click on image for larger view.] Figure 1: The Image-Transformer Chess Player Identification System

[Click on image for larger view.] Figure 1: The Image-Transformer Chess Player Identification System
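The article does not describe how an output vector is turned into a player's name. One plausible approach, sketched below under that assumption, is to average the vectors of each candidate player's known games into a reference vector and pick the player whose reference vector is most similar (by cosine similarity) to the vector of the game being identified. The function names, player names and three-element vectors are hypothetical stand-ins for real encoder outputs.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def mean_vector(vectors):
    """Element-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def identify(game_vec, reference_games):
    """Return the player whose averaged game vector is most similar to game_vec.

    reference_games maps a (hypothetical) player name to a list of game
    vectors that an encoder produced for that player's known games.
    """
    references = {name: mean_vector(vecs) for name, vecs in reference_games.items()}
    return max(references, key=lambda name: cosine(game_vec, references[name]))

# Toy 3-element vectors standing in for real encoder outputs.
known = {
    "player_a": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "player_b": [[0.1, 0.9, 0.3], [0.0, 0.8, 0.4]],
}
print(identify([0.85, 0.15, 0.05], known))  # player_a
```

Averaging many games per player is one way to smooth out game-to-game variation; real encoder outputs would have far more than three elements.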

The chess move sequence information is captured by a transformer encoder module. Before the development of transformer modules in 2017, sequence information was usually captured by recurrent neural modules such as LSTMs (long short-term memory) and GRUs (gated recurrent unit). LSTMs and GRUs have a small internal memory that remembers information about previous inputs in the sequence. For example, an LSTM or GRU can be used for sentence sentiment analysis. Consider the sentences "The movie was a great waste of time" and "The movie was a great way to waste some time." Although the two sentences have nearly the same words, the word ordering in the sentences changes the meaning from negative sentiment to positive sentiment.
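The word-order effect can be illustrated with a toy recurrent cell. This is a minimal Elman-style update, h = tanh(w_h * h + w_x * x), not a real LSTM or GRU, and the per-word values are made up. Summing the word values gives the same result for both orderings, while the recurrent state ends up negative for one ordering and positive for the other, because later words overwrite part of the earlier memory.

```python
import math

# Hypothetical per-word input values; a real model would use learned vectors.
WORD_VALUE = {"movie": 0.1, "great": 0.9, "waste": -0.8, "time": 0.0}

def bag_of_words_score(words):
    """Order-insensitive baseline: just sums the word values."""
    return sum(WORD_VALUE[w] for w in words)

def recurrent_state(words, w_h=0.5, w_x=1.0):
    """Minimal Elman-style recurrent cell (not a full LSTM or GRU).

    The hidden state h is updated one word at a time, left to right,
    so the final value depends on the order in which words arrive.
    """
    h = 0.0
    for w in words:
        h = math.tanh(w_h * h + w_x * WORD_VALUE[w])
    return h

s1 = ["movie", "great", "waste", "time"]
s2 = ["movie", "waste", "great", "time"]
print(math.isclose(bag_of_words_score(s1), bag_of_words_score(s2)))  # True
print(recurrent_state(s1) < 0 < recurrent_state(s2))                 # True
```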

In a similar way, predicting the identity of a chess player depends upon the order that game positions are reached as well as the positions themselves. This is especially true in the early part of a chess game where players have personal move order preferences.
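Move-order information can be demonstrated with a transposition: 1.Nf3 d5 2.d4 and 1.d4 d5 2.Nf3 reach the same position by different routes. The sketch below uses a hypothetical helper that only relocates pieces for simple from-to moves written in coordinate form (such as g1f3); it does not check legality or handle castling, promotion or en passant. A model that sees only the final position cannot tell these games apart, while one that sees the sequence can.

```python
FILES = "abcdefgh"

def start_board():
    """Return the standard starting position as {square: piece}, using FEN letters."""
    board = {}
    back_rank = "RNBQKBNR"
    for i, f in enumerate(FILES):
        board[f + "1"] = back_rank[i]
        board[f + "2"] = "P"
        board[f + "7"] = "p"
        board[f + "8"] = back_rank[i].lower()
    return board

def play(moves):
    """Apply simple from-to moves such as "g1f3" (no castling, promotion or en passant)."""
    board = start_board()
    for m in moves:
        src, dst = m[:2], m[2:4]
        board[dst] = board.pop(src)  # a capture simply overwrites the target square
    return board

# 1.Nf3 d5 2.d4 and 1.d4 d5 2.Nf3 use different move orders...
order_1 = ["g1f3", "d7d5", "d2d4"]
order_2 = ["d2d4", "d7d5", "g1f3"]
# ...but reach exactly the same position.
print(play(order_1) == play(order_2))  # True
print(order_1 == order_2)              # False
```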

Recurrent neural modules such as LSTMs and GRUs often work well, but transformer architecture modules have revolutionized natural language processing problems that have long sequences of inputs. LSTM and GRU modules analyze a sequence of input values, such as the words in a sentence, one at a time, from left to right. A limitation of this approach is that all words are processed the same way, with no explicit mechanism for giving some words more weight than others.

The key to transformer architecture modules is that they simultaneously accept all input items in a sequence, and then weight different items using a mechanism called neural attention. This is somewhat similar to the way a speaker can change the meaning of a sentence by emphasizing different words. For example, consider the sentence, "John is driving to Denver." If you speak the sentence, a strong emphasis on any of the five words changes the meaning. "John is DRIVING to Denver" suggests a contrast with other ways of traveling, such as flying or taking a train. But "JOHN is driving to Denver" suggests a contrast with other possible drivers.
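The emphasis analogy corresponds to scaled dot-product attention, in which every item is scored against every other item and the scores are turned into weights with a softmax: softmax(Q K^T / sqrt(d)) V. The sketch below is a single-head version in plain Python. For simplicity it assumes the queries, keys and values are all the raw input vectors, whereas a real transformer learns separate projections for each and uses multiple heads; the two-element word embeddings are invented for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    Every query is compared with every key at once, so all items in the
    sequence are available simultaneously rather than one at a time.
    Returns (outputs, weights); weights[i][j] is how much item i attends to item j.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors, one output component at a time.
        out = [sum(w[j] * values[j][c] for j in range(len(values)))
               for c in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights

# Made-up 2-element embeddings for "John is driving to Denver".
tokens = ["John", "is", "driving", "to", "Denver"]
emb = [[1.0, 0.0], [0.1, 0.1], [0.0, 2.0], [0.1, 0.0], [1.0, 1.0]]
_, w = attention(emb, emb, emb)
for tok, row in zip(tokens, w):
    print(tok, [round(x, 2) for x in row])
```

Each row of weights sums to 1 and shows how strongly one word attends to every word in the sentence, all computed in a single pass rather than left to right.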

Images as Inputs

An unusual aspect of the chess player identification system is that the input is treated as a sequence of 8x8 images. Chess positions can also be represented as text or numeric information. For example, using Forsyth-Edwards Notation (FEN), the piece placement after the Sicilian Defense opening moves 1.e4 c5 2.Nf3 is:

rnbqkbnr/pp1ppppp/8/2p5/4P3/5N2/PPPP1PPP/RNBQKB1R

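Lowercase letters represent black pieces and uppercase letters represent white pieces. Ranks (left-to-right rows) are listed from rank 8 down to rank 1, separated by slashes, and a number stands for that many consecutive empty squares on a rank.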
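The FEN rules above can be checked with a short parser that expands the piece-placement string into an 8x8 grid. This is a minimal sketch that handles only the piece-placement field, not the side-to-move, castling or move-counter fields of a full FEN string; the function name is illustrative.

```python
def fen_to_grid(placement):
    """Expand the piece-placement field of a FEN string into an 8x8 grid.

    Row 0 is rank 8 (Black's back rank) and column 0 is the a-file.
    Empty squares are represented by ".".
    """
    grid = []
    for rank in placement.split("/"):
        row = []
        for ch in rank:
            if ch.isdigit():
                row.extend("." * int(ch))  # a digit is a run of empty squares
            else:
                row.append(ch)             # uppercase = White, lowercase = Black
        if len(row) != 8:
            raise ValueError(f"rank {rank!r} does not describe 8 squares")
        grid.append(row)
    if len(grid) != 8:
        raise ValueError("expected 8 ranks")
    return grid

sicilian = "rnbqkbnr/pp1ppppp/8/2p5/4P3/5N2/PPPP1PPP/RNBQKB1R"
for row in fen_to_grid(sicilian):
    print(" ".join(row))
```

Printed, the grid shows rank 8 at the top, with the black pawn on c5, the white pawn on e4 and the white knight on f3.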

But representing a chess position as a string of text obscures some information about the position's geometry. For example, with FEN notation, it's not obvious which pieces are located on the same file (top-to-bottom column). By representing a chess position as an 8x8 image, it's easier for a machine learning model to make use of board geometry.
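One common way to turn a position into image-like input, assumed here because the article does not give the paper's exact format, is a stack of 12 binary 8x8 planes, one per piece type and color. With that layout, finding every piece on a file is just a matter of reading one column from each plane. The function names are hypothetical.

```python
PIECES = "PNBRQKpnbrqk"  # one plane per piece type and color

def fen_to_planes(placement):
    """Encode a FEN piece-placement field as 12 binary 8x8 planes.

    planes[p][r][c] is 1 if piece PIECES[p] stands on row r (row 0 = rank 8)
    and column c (column 0 = a-file), else 0.
    """
    planes = [[[0] * 8 for _ in range(8)] for _ in PIECES]
    for r, rank in enumerate(placement.split("/")):
        c = 0
        for ch in rank:
            if ch.isdigit():
                c += int(ch)  # skip a run of empty squares
            else:
                planes[PIECES.index(ch)][r][c] = 1
                c += 1
    return planes

def pieces_on_file(planes, file_letter):
    """List (piece, square) pairs on one file by reading a single column of every plane."""
    c = "abcdefgh".index(file_letter)
    found = []
    for p, plane in enumerate(planes):
        for r in range(8):
            if plane[r][c]:
                found.append((PIECES[p], file_letter + str(8 - r)))
    return found

planes = fen_to_planes("rnbqkbnr/pp1ppppp/8/2p5/4P3/5N2/PPPP1PPP/RNBQKB1R")
print(pieces_on_file(planes, "c"))
```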

The image-transformer identification system uses convolutional neural networks (CNNs) with residual blocks. CNNs are neural network architectures specifically designed to work with images. Residual blocks are an internal architecture, with skip connections that add a block's input to its output, that helps prevent model training failure due to the vanishing gradient problem.
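The residual idea can be shown with a single-channel sketch: a block computes y = relu(x + F(x)), where F is two 3x3 convolutions with a ReLU between them. This simplification (one channel, no batch normalization, hand-set kernels) is an assumption for illustration and not the paper's architecture. When F's weights are zero, the block simply passes a non-negative input through, which illustrates how the skip connection gives the signal, and its gradient, a direct path through the block.

```python
def conv3x3(grid, kernel):
    """Single-channel 3x3 convolution (cross-correlation) with zero padding, so the output keeps the input size."""
    n = len(grid)
    out = [[0.0] * n for _ in range(n)]
    for r in range(n):
        for c in range(n):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < n and 0 <= cc < n:
                        total += grid[rr][cc] * kernel[dr + 1][dc + 1]
            out[r][c] = total
    return out

def relu(grid):
    """Element-wise ReLU: replace negative values with 0."""
    return [[max(0.0, v) for v in row] for row in grid]

def residual_block(x, k1, k2):
    """y = relu(x + F(x)), where F is conv -> relu -> conv.

    The skip connection adds the input x back in after F.
    """
    f = conv3x3(relu(conv3x3(x, k1)), k2)
    summed = [[a + b for a, b in zip(rx, rf)] for rx, rf in zip(x, f)]
    return relu(summed)

zero = [[0.0] * 3 for _ in range(3)]
board = [[1.0 if (r + c) % 2 == 0 else 0.0 for c in range(8)] for r in range(8)]
# With F's weights at zero, the block passes a non-negative input through unchanged.
print(residual_block(board, zero, zero) == board)  # True
```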

Experimental Results

The "Detecting Individual Decision-Making Style" research paper presents dozens of results and sub-results. The key takeaway is that from a group of approximately 2,500 chess players, using just 100 games per player for training, the identification system correctly identified a player with 98 percent accuracy. A random guess would be correct only about 0.04 percent of the time. Previous research results achieved 93 percent accuracy, so the image-transformer architecture is a significant improvement.
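The chance figure is simply 1 divided by the number of candidate players: 1/2,500 = 0.0004, or 0.04 percent. The sketch below computes that baseline along with top-1 accuracy (the fraction of games whose single best guess is correct); treating the reported figures as top-1 accuracy is an assumption, and the function names are illustrative.

```python
def top1_accuracy(predicted, actual):
    """Fraction of games whose predicted player matches the true player."""
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)

def chance_accuracy(num_players):
    """Expected accuracy of guessing uniformly at random among num_players candidates."""
    return 1 / num_players

print(f"{chance_accuracy(2500):.2%}")  # 0.04%
print(top1_accuracy(["p1", "p2", "p1"], ["p1", "p2", "p2"]))
```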

The 98 percent accuracy of the image-transformer system is impressive, but this result uses game positions starting from the opening position. The first 15 moves of a chess game are a strong predictor of chess player identity because most chess players have a strong preference for certain openings. For example, former world champion Bobby Fischer almost always played 1.e4 with the white pieces, and the Sicilian Defense (1.e4 c5) or King's Indian Defense (1.d4 Nf6) with the black pieces.

When the first 15 moves of chess games are hidden, the image-transformer chess player identification system still achieved an impressive 86 percent accuracy. This is much better than the approximately 27 percent accuracy achieved by previous research results.
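The article doesn't say exactly how the opening was hidden. A minimal sketch, assuming the opening positions are simply dropped from the input sequence and that a "move" means a full move (one White ply plus one Black ply), looks like this; the function name and the 80-position game are hypothetical.

```python
def mask_opening(positions, moves_to_skip=15):
    """Drop the opening so a model only sees the rest of the game.

    Assumes positions[0] is the position after White's first move and that
    each full move is two plies (one White, one Black), so skipping 15 moves
    means skipping 30 positions.
    """
    plies_to_skip = 2 * moves_to_skip
    return positions[plies_to_skip:]

game = [f"pos_{i}" for i in range(1, 81)]  # a hypothetical 40-move (80-ply) game
rest = mask_opening(game)
print(len(rest), rest[0])  # 50 pos_31
```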

Wrap Up

Because the image-transformer chess player identification system treats input items as images, it's quite possible that the architecture can be extended to scenarios such as predicting the identity of a person from video of their walking patterns or hand motions while talking. This raises privacy and security issues, especially with the increasing use of video cameras in public places.

The "Detecting Individual Decision-Making Style" research paper is the most recent in a long line of research papers related to computer science and chess. Alan Turing speculated about the possibility of a computer chess program as early as 1945.

According to most references, the first computer program that actually played chess was written in 1957 by Alex Bernstein at MIT for an IBM 704 machine. The program wasn’t very good, but it did play legal moves. Work on computer chess continued through the 1960s. In 1970, a tournament with six leading chess programs was organized by the Association for Computing Machinery. The winning program, Chess 3.0, ran on a CDC mainframe computer.

In the 1980s and 1990s, computer chess programs slowly but surely improved. Then came the AlphaZero program in 2017. In just 24 hours of deep reinforcement learning, AlphaZero achieved a superhuman level of play. A few weeks later, AlphaZero defeated the reigning champion computer program, Stockfish. Stockfish was the culmination of 65 years of work by some of the best minds in history. In a 100-game match, AlphaZero obliterated Stockfish, winning 28 games, losing none and drawing 72.

The Pure AI editors asked Dr. James McCaffrey for an opinion on the image-transformer chess player identification system. McCaffrey is a machine learning scientist and engineer at Microsoft Research and an expert-rated chess player. McCaffrey noted, "The chess player identification system is very impressive." He added, "There is increasing successful use of transformer-based neural systems for a wide range of problems beyond natural language processing."