
Researchers Use Machine Learning Techniques to Detect Compromised Network Accounts

Researchers and engineers at Microsoft have developed a successful system that detects compromised enterprise network user accounts.

- By Pure AI Editors

- 07/07/2021

Researchers and engineers at Microsoft have developed a successful system that detects compromised enterprise network user accounts. The effort is named Project Qidemon. At the core of Qidemon is a deep neural autoencoder architecture. In one experiment with real-world data, Qidemon examined 20,000 user accounts and successfully found seven of 13 known compromised accounts.

The Problem Scenario

One way to think about enterprise network security is to consider internal vs. external threats. Internal threats, in the form of malicious users with legitimate access to network resources, are particularly dangerous. In many scenarios, network users have broad permissions to view and modify resources, so a single malicious action by an insider can have catastrophic consequences for a company.

Qidemon tackles this problem with a deep neural autoencoder. An autoencoder accepts input, such as network user login activity, compresses it internally into a smaller representation, and then tries to reconstruct the original input from that representation. The difference between the actual input and the computed output is called reconstruction error. The fundamental idea is that inputs with high reconstruction error are anomalous, so the accounts associated with those inputs warrant close inspection.
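A trained autoencoder has many learned weights, but the compress-then-reconstruct idea can be sketched with a hand-weighted linear encoder and decoder. In the minimal Python sketch below, the weights are assumptions chosen for illustration, not learned from data: two input features are squeezed into a single number and then expanded back, so inputs that follow the pattern the weights encode ("both features move together") reconstruct almost perfectly, and inputs that break the pattern do not.

```python
import math

# Hypothetical, hand-picked weights standing in for a trained autoencoder.
# The single-number "bottleneck" captures the pattern "both features move together".
ENCODER = (1 / math.sqrt(2), 1 / math.sqrt(2))
DECODER = ENCODER  # tied weights: decode along the same direction


def encode(x):
    """Compress a 2-feature input into a 1-number code."""
    return ENCODER[0] * x[0] + ENCODER[1] * x[1]


def decode(code):
    """Expand the 1-number code back into 2 features."""
    return (code * DECODER[0], code * DECODER[1])


def reconstruction_error(x):
    """Sum of squared differences between the input and its reconstruction."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


typical = (0.6, 0.6)  # follows the encoded pattern
unusual = (0.9, 0.1)  # breaks it
print(round(reconstruction_error(typical), 6))  # 0.0
print(round(reconstruction_error(unusual), 6))  # 0.32
```

A real deep autoencoder learns its weights from data and uses several nonlinear layers, but the scoring logic is the same: compress, reconstruct, and measure how much was lost.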

For example, suppose an input to a deep neural autoencoder is (time = 21:30:00, recent failed attempts = 1, IP address ID = 73027). A prediction output such as (time = 21:27:35, recent failed attempts = 1.5, IP address ID = 73027) would have low reconstruction error, but a prediction output such as (time = 06:25:00, recent failed attempts = 3.5, IP address ID = 99999) would have high reconstruction error.
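The example does not say how the three fields are combined into one error score. One reasonable choice, sketched below in Python as an assumption rather than Qidemon's actual scoring, is to scale each field to a comparable range and sum the squared differences. The time difference is measured around the 24-hour clock and divided by 12 hours; the failed-attempt difference is divided by a hypothetical scale of 5; and the IP address ID is treated as a category that either matches (0) or does not (1), because a numeric distance between two ID values has no meaning.

```python
def seconds_since_midnight(hhmmss):
    """Convert an 'HH:MM:SS' string to seconds since midnight."""
    h, m, s = (int(part) for part in hhmmss.split(":"))
    return h * 3600 + m * 60 + s


def time_distance(t1, t2):
    """Distance between two clock times, wrapping around midnight, scaled to [0, 1]."""
    d = abs(seconds_since_midnight(t1) - seconds_since_midnight(t2))
    d = min(d, 86400 - d)  # 23:59 and 00:01 are 2 minutes apart
    return d / 43200       # 12 hours is the largest possible distance


FAILED_ATTEMPTS_SCALE = 5.0  # hypothetical normalizing constant


def reconstruction_error(actual, predicted):
    """Squared-error score over (time, recent failed attempts, IP address ID)."""
    t_err = time_distance(actual[0], predicted[0])
    f_err = (actual[1] - predicted[1]) / FAILED_ATTEMPTS_SCALE
    ip_err = 0.0 if actual[2] == predicted[2] else 1.0  # categorical match
    return t_err ** 2 + f_err ** 2 + ip_err ** 2


actual = ("21:30:00", 1, 73027)
close = ("21:27:35", 1.5, 73027)
far = ("06:25:00", 3.5, 99999)
print(round(reconstruction_error(actual, close), 4))  # 0.01
print(round(reconstruction_error(actual, far), 4))    # 1.8021
```

The low-error and high-error predictions from the example land about two orders of magnitude apart under this scoring, although the exact numbers depend entirely on the assumed scaling choices.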

Most early network account anomaly detection systems, dating from the 1980s, were rule-based. Such a system might have handcrafted rules such as "IF recent_failed_attempts > 5 AND time BETWEEN (03:00:00, 0:30:00) THEN risk = 8.33". Rule-based systems can be effective, but they require constant manual updates and can be easily defeated by a knowledgeable adversary.
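A handcrafted rule like this amounts to a few lines of code. The Python sketch below is illustrative only: the failed-attempt threshold of 5 and the risk value of 8.33 come from the example rule, while the function names and the 03:00 to 03:30 window used in the usage lines are hypothetical. The window check also handles windows that wrap past midnight, one of the details a rule author must get right by hand.

```python
from datetime import time


def in_window(t, start, end):
    """True if clock time t falls within [start, end], allowing windows that wrap past midnight."""
    if start <= end:
        return start <= t <= end
    return t >= start or t <= end


def rule_risk(recent_failed_attempts, login_time, window_start, window_end):
    """Handcrafted rule: many recent failures during a suspicious window -> fixed risk score."""
    if recent_failed_attempts > 5 and in_window(login_time, window_start, window_end):
        return 8.33
    return 0.0


# Hypothetical suspicious window, for illustration only.
start, end = time(3, 0, 0), time(3, 30, 0)
print(rule_risk(7, time(3, 15, 0), start, end))  # 8.33
print(rule_risk(7, time(9, 15, 0), start, end))  # 0.0
print(rule_risk(6, time(23, 45, 0), time(23, 0, 0), time(1, 0, 0)))  # 8.33 (window wraps midnight)
```

An adversary who learns the rule can simply stop at five failed attempts or log in at 03:31, and the rule returns 0.0; that brittleness is the weakness the paragraph describes.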

The first deep neural anomaly detection systems, dating from the early 2000s, used a supervised approach with a large set of training data. The training data consists of many thousands of items, where each item has a set of input values and a manually supplied class label (0 = normal activity, 1 = anomalous activity). A neural model is created from the training data; essentially, the anomaly detection model learns rules from the labeled examples. Supervised systems can also be very effective, but they require huge amounts of labeled training data, which is usually expensive to create. Additionally, supervised neural systems often cannot deal well with new, previously unseen kinds of compromised account activity.
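The supervised workflow can be sketched in miniature. The Python example below uses a perceptron, a single artificial neuron and a far simpler model than the deep networks the paragraph describes, trained on a handful of made-up labeled items (0 = normal, 1 = anomalous). Each item has two hypothetical features: recent failed attempts divided by 10, and a 1/0 flag for a late-night login.

```python
def predict(weights, features):
    """Return 1 (anomalous) if the weighted sum is positive, else 0 (normal)."""
    x = (1.0,) + tuple(features)  # prepend a constant 1 for the bias weight
    return 1 if sum(w * xi for w, xi in zip(weights, x)) > 0 else 0


def train_perceptron(data, max_epochs=100):
    """Classic perceptron learning rule on labeled (features, label) pairs."""
    weights = [0.0] * (len(data[0][0]) + 1)
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in data:
            error = label - predict(weights, features)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                x = (1.0,) + tuple(features)
                weights = [w + error * xi for w, xi in zip(weights, x)]
        if mistakes == 0:  # every training item classified correctly
            break
    return weights


# Made-up labeled training data: ((failed_attempts / 10, late_night_flag), label)
training_data = [
    ((0.0, 0), 0), ((0.1, 0), 0), ((0.0, 1), 0),
    ((0.8, 1), 1), ((0.9, 1), 1), ((0.7, 1), 1),
]
w = train_perceptron(training_data)
print([predict(w, f) for f, _ in training_data])  # [0, 0, 0, 1, 1, 1]
print(predict(w, (0.85, 1)), predict(w, (0.1, 0)))  # 1 0
```

The second print hints at the weakness noted above: a compromised account that produces only one failed attempt during the day looks exactly like the labeled normal items, so a model that learned only from past labels calls it normal.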

Deep neural autoencoders are unsupervised systems, meaning they don't need manually labeled training data. Deep neural autoencoders for anomaly detection have been used with moderate success since about 2010. However, a simplistic autoencoder architecture often suffers from a very high false positive rate: too many legitimate network account activities are flagged as potentially anomalous. The Project Qidemon team created a sophisticated, but still practical, autoencoder architecture that balances the need to correctly identify compromised accounts with the need to minimize false positive alarms.
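In practice, the false positive trade-off comes down to where the alarm threshold on reconstruction error is placed. The Python sketch below uses made-up error scores and made-up ground truth purely for illustration; it is not Qidemon's thresholding scheme. It shows that lowering the threshold catches more compromised accounts but also flags more legitimate ones.

```python
def flag_counts(errors, is_compromised, threshold):
    """Flag accounts whose reconstruction error exceeds threshold; return (caught, false_alarms)."""
    caught = false_alarms = 0
    for err, compromised in zip(errors, is_compromised):
        if err > threshold:
            if compromised:
                caught += 1
            else:
                false_alarms += 1
    return caught, false_alarms


# Made-up reconstruction errors for eight accounts; the ones at index 3 and 6 are compromised.
errors = [0.02, 0.05, 0.04, 0.15, 0.12, 0.03, 0.45, 0.20]
is_compromised = [False, False, False, True, False, False, True, False]

for threshold in (0.3, 0.18, 0.1):
    caught, false_alarms = flag_counts(errors, is_compromised, threshold)
    print(f"threshold {threshold}: caught {caught} of 2, false alarms {false_alarms}")
```

Catching the moderately anomalous compromised account (error 0.15) requires a threshold low enough to also flag two legitimate accounts. An architecture that separates the two groups more cleanly makes this trade-off less painful, which is the balance the Qidemon design is described as striking.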

Effectiveness of the Qidemon System

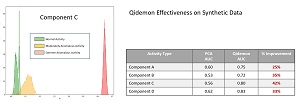

The Project Qidemon team evaluated the effectiveness of its compromised user account detection system on both synthetic data and real data. The synthetic data set contained three types of input data: normal, moderately anomalous, and extremely anomalous. Representative results are shown in Figure 1.

Figure 1: The Qidemon system is effective on synthetic data

The graph on the left side of the figure shows that the Qidemon system clearly identifies all the extremely anomalous synthetic data items. More importantly, the system does an excellent job of distinguishing between normal activities and moderately anomalous activities.

The Qidemon system was compared to a system that used Principal Component Analysis (PCA). PCA is a classical statistics technique that is somewhat similar to a deep neural autoencoder in the sense that both models create a compressed representation of their source data. The compressed representation can be used to reconstruct the source data, so a reconstruction error metric can be calculated.
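To make the comparison concrete, the Python sketch below shows PCA-based reconstruction error in the simplest possible setting: two features compressed to a single number along the principal direction. It is a hypothetical illustration with made-up data, not the PCA baseline used in the Qidemon evaluation, and it relies on the closed-form top eigenvector of a 2x2 covariance matrix so that it needs only the standard library.

```python
import math


def fit_pca_2d(points):
    """Return (mean, unit principal direction) for 2-D points via the closed-form 2x2 eigenvector."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Entries of the (population) covariance matrix [[a, b], [b, c]].
    a = sum((p[0] - mx) ** 2 for p in points) / n
    c = sum((p[1] - my) ** 2 for p in points) / n
    b = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Largest eigenvalue of a symmetric 2x2 matrix.
    lam = (a + c) / 2 + math.sqrt(((a - c) / 2) ** 2 + b ** 2)
    if abs(b) > 1e-12:
        vx, vy = lam - c, b
    else:  # uncorrelated features: pick the axis with more variance
        vx, vy = (1.0, 0.0) if a >= c else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    return (mx, my), (vx / norm, vy / norm)


def pca_reconstruction_error(point, mean, direction):
    """Compress a point to one coordinate, reconstruct it, and return the squared error."""
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    code = dx * direction[0] + dy * direction[1]  # 1-number compressed representation
    rx, ry = mean[0] + code * direction[0], mean[1] + code * direction[1]
    return (point[0] - rx) ** 2 + (point[1] - ry) ** 2


# Made-up "normal" data lying roughly along the line y = x.
normal_points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8), (5.0, 5.0)]
mean, direction = fit_pca_2d(normal_points)
print(pca_reconstruction_error((3.5, 3.5), mean, direction))  # small: fits the pattern
print(pca_reconstruction_error((2.0, 4.5), mean, direction))  # large: breaks the pattern
```

PCA can only capture linear structure like this single line; a deep autoencoder's nonlinear layers can model more complex patterns of normal activity, which is one plausible reason for the performance gap reported in Figure 1.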

The table on the right side of Figure 1 shows that the Qidemon anomaly detection system significantly outperforms the PCA-based system. The primary evaluation metric used to compare the two systems is the AUC (area under the curve) of the ROC (receiver operating characteristic) graph. Briefly, ROC AUC measures how well a system's anomaly scores rank anomalous items above normal items, across every possible alarm threshold. This matters because in anomaly detection systems, the vast majority of activity is normal. A plain accuracy metric rewards correctly predicting all that normal activity, so even a system that flags nothing will be correct most of the time, and you'll get an overly optimistic estimate of its effectiveness. ROC AUC is not inflated in this way.
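The difference between accuracy and ROC AUC can be sketched in a few lines of Python. The example below computes ROC AUC with the pairwise-ranking formulation (the probability that a randomly chosen anomalous item scores higher than a randomly chosen normal one, which equals the area under the ROC curve) and compares it with accuracy for a hypothetical detector that flags nothing, using the 13-in-20,000 base rate from the real-world experiment.

```python
def roc_auc(scores, labels):
    """ROC AUC as P(random anomalous item outscores random normal item); ties count 1/2."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def accuracy(predictions, labels):
    """Fraction of items whose predicted label matches the true label."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# 13 compromised accounts (label 1) among 20,000.
labels = [1] * 13 + [0] * (20000 - 13)

# A useless detector that calls every account normal looks excellent on accuracy...
print(accuracy([0] * len(labels), labels))  # 0.99935
# ...but it gives every account the same score, so its ROC AUC is only 0.5 (chance level).
print(roc_auc([0.0] * len(labels), labels))  # 0.5
```

A detector whose scores put every anomalous item above every normal item reaches a ROC AUC of 1.0, regardless of how rare the anomalies are.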

The Qidemon System Applied to Real-World Data

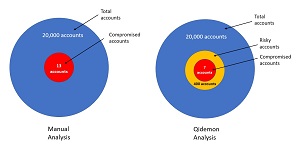

The Qidemon system was applied to real-world data. Key results are shown in Figure 3. An enterprise network with 20,000 user accounts had been manually analyzed and was found to have 13 compromised accounts (0.065 percent). The Qidemon system analyzed the account data and initially flagged 400 suspicious accounts. Further analysis showed that these 400 accounts contained seven of the 13 compromised accounts.

Figure 3: The Qidemon system is effective on real-world data
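The reported numbers translate directly into standard detection metrics. The short Python calculation below uses only figures stated in the real-world results; the metric names (recall, precision, lift) are standard terms that the article itself does not use.

```python
total_accounts = 20_000
known_compromised = 13
flagged = 400
found = 7

base_rate = known_compromised / total_accounts  # fraction of all accounts that are compromised
recall = found / known_compromised              # share of compromised accounts caught
precision = found / flagged                     # share of flagged accounts that are compromised
lift = precision / base_rate                    # how much richer the flagged set is than a random sample

print(f"base rate {base_rate:.3%}, recall {recall:.1%}, precision {precision:.2%}, lift {lift:.1f}x")
# base rate 0.065%, recall 53.8%, precision 1.75%, lift 26.9x
```

Put differently, a reviewer working through the 400 flagged accounts would encounter compromised accounts roughly 27 times more often than one examining accounts at random, while just over half of the known compromised accounts were caught.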

Results like these represent the state of the art for unsupervised anomaly detection. If manually examining a network account takes 5 minutes, manually examining all 20,000 accounts would take 100,000 minutes, or about 1,667 hours: one person working 40 hours per week for roughly 42 weeks. Because only the 400 flagged accounts, 2 percent of the total, needed manual review, the Qidemon system reduced the manual labor required to examine the accounts by more than 95 percent.
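The effort estimate can be checked with a few lines of Python. The 5-minutes-per-account figure is the article's own assumption; the comparison with reviewing only the 400 accounts Qidemon flagged is derived from the real-world results.

```python
MINUTES_PER_ACCOUNT = 5  # the article's assumption
HOURS_PER_WEEK = 40


def review_effort(accounts):
    """Return (hours, 40-hour work weeks) needed to review `accounts` by hand."""
    hours = accounts * MINUTES_PER_ACCOUNT / 60
    return hours, hours / HOURS_PER_WEEK


all_hours, all_weeks = review_effort(20_000)
flagged_hours, flagged_weeks = review_effort(400)
reduction = 1 - flagged_hours / all_hours

print(f"all accounts: {all_hours:,.0f} hours ({all_weeks:.1f} weeks)")          # 1,667 hours (41.7 weeks)
print(f"flagged only: {flagged_hours:,.1f} hours ({flagged_weeks:.1f} weeks)")  # 33.3 hours (0.8 weeks)
print(f"reduction: {reduction:.0%}")                                           # 98%
```

Reviewing only the flagged accounts takes less than one work week instead of most of a year.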

Remote Collaboration During the Pandemic

The Qidemon team includes J. Zukerman, Y. Bokobza, and I. Argoety from the Microsoft Israel Development Center, and P. Godefroid and J. McCaffrey from the Microsoft Redmond Research Lab. The project was part of the internal Microsoft AI School program, which develops advanced machine learning techniques for products and services.

The project was developed entirely during the COVID-19 pandemic. Lead engineer Zukerman commented, "Working via Teams made me come to the meeting super prepared and focused on getting the most value out of each meeting."

Lead project manager Bokobza added that remote collaboration was effective, but that there was some "difficulty to present the project to a larger audience because of the online meeting fatigue factor."

McCaffrey noted, "Deep neural systems have had amazing successes in areas such as image recognition, speech and natural language processing, but progress in security systems has been slower. In my opinion, this project represents a significant step forward."

Zukerman and Bokobza speculated, "There are some fascinating technical paths Qidemon can take." For example, they suggested "applying a generative model (VAE) to correlate the generated anomalies with unseen attacks," where VAE stands for variational autoencoder.