AI Test Lab

First Look: OpenAI's New o1 Models

OpenAI’s new family of o1 models use "chain of thought" reasoning to excel in complex tasks like math, coding and science. Though still in preview, o1 represents a significant advancement in LLMs, particularly for reasoning and future breakthroughs.

OpenAI recently released preview versions of a new family of large language models (LLMs) named "o1." These models offer breakthroughs in "advanced reasoning capabilities" in part because they "spend more time thinking before they respond," the company explained in its announcement of the models.

So how different are these models from what's already out there? And what do they mean for the practical application of AI tools in your organization, both now and down the road? Read on for what's new in o1, what's to come and why it all actually matters.

What Are the o1 Models, and How Are They Different?

To understand the breakthroughs that OpenAI is talking about, we first need to cover how traditional LLMs work.

In general, LLMs function as highly capable "next-word predictors." They receive a request, then, based on all the millions and millions of text samples they've been trained on, they predict the likely first word of what would make a good response, followed by the next word, then the next word, then the next, and so on; they build their answers word by word. (BTW: To the LLM, they're not technically words, but "tokens," which are chunks of characters. This will become important later.)

The overall output quality of any LLM is largely based on the data it was trained on; however, adjustments to the output can be done with settings like "temperature" (you can play with this yourself in OpenAI's Playground mode) and post-processing refinements like filters and even fact-checking (which is why you don't see as many hallucinations now as when these models first appeared).

That said, most LLMs still struggle with requests related to reasoning, deduction, spatial understanding, logic, higher-level mathematics or anything requiring it to follow complex steps. It's challenging for LLM creators to make improvements in these areas through traditional training or additional post-processing.

Even things that seem very simple to humans can be overly complex or even impossible for LLMs to accomplish. See, for example, the "two rs in strawberry" example that recently went viral. This was likely the result of LLMs being unable to read letters like people do. Instead, they process text as those aforementioned tokens, which are coded character sets that are usually portions of a word or output. This error has since been fixed in most models, likely via post-processing.

However, here's an example of a problem that, as of this writing, will still generate incorrect results from most LLMs, if not all of them:

Assume the laws of physics on earth. A grape is put in a small bowl and that small bowl is placed upside down on a table. The bowl is then placed in the fridge. Where is the grape? Explain your answer step-by-step.

Humans can tell that the grape stays on the table when the bowl is moved to the fridge. But go ahead and try it yourself on any model and see what it answers. Note that I actually took this example from a video OpenAI released to show off o1's reasoning capabilities, although I changed the strawberry to a grape and the cup to a bowl.

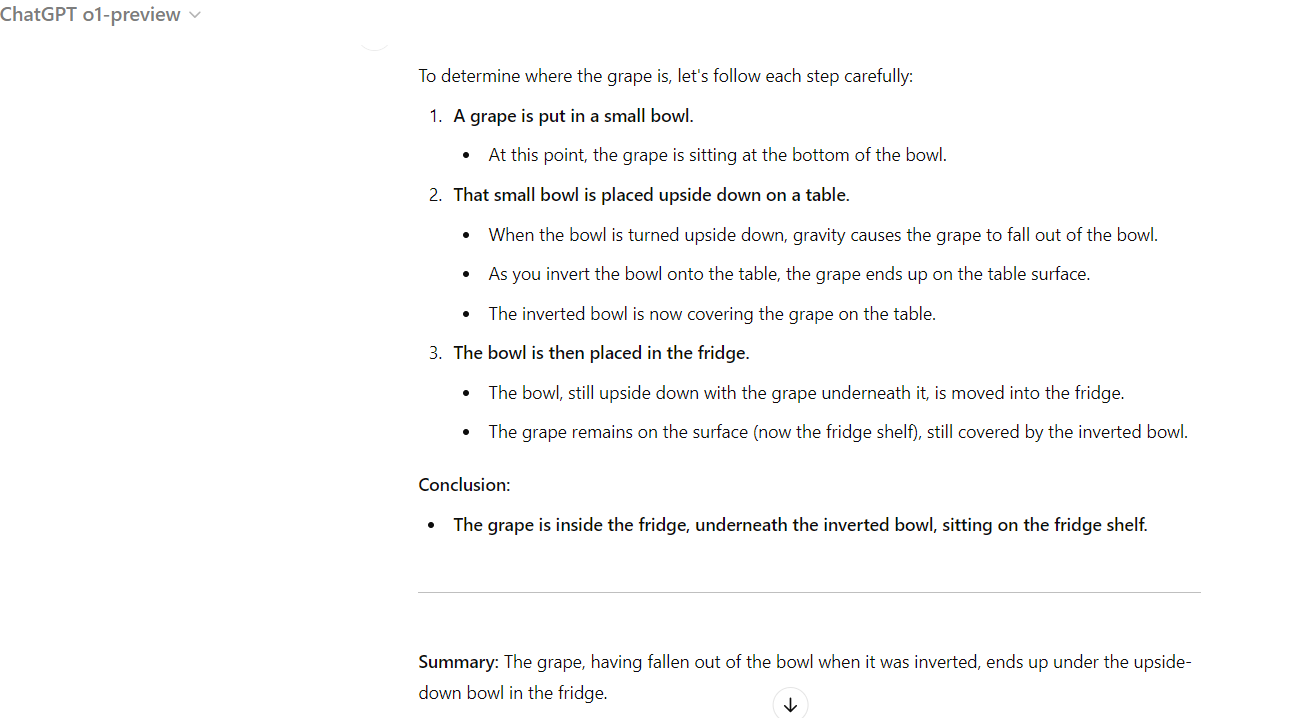

Unfortunately, when I tested it, even the o1-preview model got it wrong. See below:

Even o1 is stumped by the "upside-down container being moved" question, showing how difficult spatial problems and complex reasoning are for all LLMs.

Even o1 is stumped by the "upside-down container being moved" question, showing how difficult spatial problems and complex reasoning are for all LLMs.

The fact that the new model (importantly, still in "preview" mode) that's designed for logical and spatial reasoning struggles with this problem shows how difficult these are to solve. And, as you can imagine, there are many types of problems that need complex reasoning skills; in the practical realm, these often relate to math, science and code.

To solve these and other challenging queries, OpenAI has turned to "chain of thought" reasoning, an approach discussed in this 2022 research article. With "chain of thought" or "chain reasoning," model engineers don't try to improve a model's output by refining its training data, adjusting its settings or adding more post-processing (although all of those things were definitely done to o1). Instead, they attempt to improve the output by adding additional "thinking" steps (and therefore, time) to the processing of the request itself. As Django co-founder Simon Willison put it in his post on the topic, you can think of chain-of-thought reasoning as engineers instructing the LLM to "think step by step" with every query. By following that additional instruction, the system is better able to handle complex tasks.

OpenAI turned to "chain of thought" reasoning for the o1 models. With "chain of thought," model engineers don't try to improve a model's output by refining its training data, adjusting its settings or adding more post-processing. Instead, they attempt to improve the output by adding additional "thinking" steps (and therefore, time) to the processing of the request itself.

When you put a query to o1 you'll see this "thinking" in action. As it processes, it takes its time and will indicate the stages of "thought" it goes through; it will occasionally respond with something like, "breaking down the request," "taking a closer look" and "revisiting the problem."

In most cases the results are truly a leap ahead. Here you can view benchmark tests that show the improvements OpenAI has seen with this approach to handling complex problems related to reasoning, math, chemistry, physics and code. And remember, o1 is still in preview right now, so there's more to come.

Here are some examples of how OpenAI expects these o1 models to be used in the real world (featuring direct links to OpenAI's videos with experts explaining these use cases):

Big Improvements, But Not for Every Use Case (Yet)

Not only did OpenAI use benchmarks to evaluate its o1 output, it also conducted "blind taste tests" by showing users anonymized output from both the o1 and 4o models side-by-side, and then asking users to vote for the output they preferred. Here's what OpenAI found: "o1-preview is preferred to gpt-4o by a large margin in reasoning-heavy categories like data analysis, coding, and math. However, o1-preview is not preferred on some natural language tasks, suggesting that it is not well-suited for all use cases."

The chart below shows which model was preferred for the following text- and science-based tasks:

You can see that o1 is pretty close to 4o for many text-based inquiries; for personal writing, 4o was only slightly preferred by users, while the two models were basically tied when it came to editing text. However, right now o1 executes the "chain reasoning" process with every query, even when it's not required for a problem, meaning similar queries will take you longer using o1. Also, o1-preview currently doesn't have the built-in capabilities 4o does, like image and GPT creation, Web browsing and advanced data analytics. OpenAI said it is working on integrating these into o1 but it will take some time. In addition, o1-preview is available only to paid users, and its use is limited (more on usage limits below). So right now, unless your specific use case is related to reasoning, math, code, logic or science, you may want to continue using 4o for your day-to-day queries.

Your o1 Model Choices (Coders, Take Note!)

Currently, there are two versions of o1: o1-preview and o1-mini. According to the announcement of the o1 family, the mini version, with its faster processing, was created specifically with developers in mind:

To offer a more efficient solution for developers, we're also releasing OpenAI o1-mini, a faster, cheaper reasoning model that is particularly effective at coding. As a smaller model, o1-mini is 80% cheaper than o1-preview, making it a powerful, cost-effective model for applications that require reasoning but not broad world knowledge.

The company further explains the "lack of broad-world knowledge" and its impact on that model elsewhere on its Web site:

Due to its specialization on STEM reasoning capabilities, o1-mini's factual knowledge on non-STEM topics such as dates, biographies, and trivia is comparable to small LLMs such as GPT-4o mini. We will improve these limitations in future versions, as well as experiment with extending the model to other modalities and specialities outside of STEM.

So unless your use case relates to code or is STEM-specific, o1-mini may not be for you (at least not yet).

As mentioned above, the o1 models currently have usage limits within the ChatGPT interface. Currently, you must have a paid-level subscription for both, and while o1-mini is limited to 50 queries per day, o1-preview is limited to 50 per week. OpenAI said it eventually plans to make o1-mini available in its free tier so everyone can access it, but it hasn't given a specific date for when this will happen.

You may be wondering about the "80% cheaper" comment above if both models are accessed via ChatGPT for one price. OpenAI is referencing the pricing for the developer versions of the models, which are accessed via APIs and then pulled into various applications. For this developer access, most LLMs charge by input/output token usage, usually on a per-million basis.

Here's a quick look at the current pricing for OpenAI's main models:

As you can see, the o1 models are currently much more expensive than any of OpenAI's other models. Remember that the processing power used by the o1 models is significant; longer processing time means more power on the back end. And that time is expensive. Note that for the next version of o1-preview, OpenA is working on not making the model go through all that processing when it doesn't need to happen, thus saving both time and processing power.

Also note that even though o1's processing takes place in seconds, this post on X by an OpenAI researcher puts into perspective the breakthrough that o1 represents when that time is expanded into hours, days or even weeks:

And that's really what the o1 family represents: It's not necessarily about what it can do today (though it's extremely impressive), but about LLMs taking a giant leap forward, becoming capable of more than day-to-day tasks and developing products and breakthroughs on a much bigger scale -- and, most notably, making that power available to the masses.

Final Verdict

There's no doubt that these o1 preview versions are a huge leap forward for LLMs, especially for their use in STEM (e.g., coding, sciences, cryptography, medicine, etc.). They showcase the mind-blowing potential of what generative AI will be able to accomplish not-so-far down the road. That said, here at 1105 Media (parent company of PureAI, Converge360, TDWI and AI Boardroom) we're focused on how businesses can use generative AI technology today. With that in mind, our take on these very early preview models is this:

While you should definitely play around with o1 to see for yourself what it offers, for most non-STEM businesses, there's currently no need to switch end users or applications to the o1 models, especially if you will need to spend money to do so or if your applications aren't STEM-focused. Instead, give your developers access to o1-mini and then keep an eye on the many improvements that OpenAI adds to these preview models over the next weeks and months, possibly with a price reduction attached (OpenAI has lowered costs for some models in the past as they moved forward in maturity). We suspect these will make o1 worth a full-time switch fairly soon.

If you want to stay on top of what's happening with these (and other) generative AI models, consider attending some of our future events:

Are you doing something interesting, fun or unique with generative AI technologies in your workplace? Let the author know at [email protected].

About the Author

Becky Nagel serves as vice president of AI for 1105 Media specializing in developing media, events and training for companies around AI and generative AI technology. She also regularly writes and reports on AI news, and is the founding editor of PureAI.com. She's the author of "ChatGPT Prompt 101 Guide for Business Users" and other popular AI resources with a real-world business perspective. She regularly speaks, writes and develops content around AI, generative AI and other business tech. She has a background in Web technology and B2B enterprise technology journalism.