AI Can Help IT Security Teams Go on Offense: CSA

A new paper from the Cloud Security Alliance (CSA) details how IT teams can use AI to identify and address security weaknesses in their infrastructures.

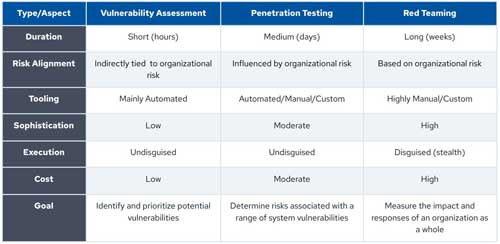

There are three ways AI can be integrated into cybersecurity approaches to make them more proactive, according to the "Using AI for Offensive Security" paper.

- Vulnerability assessment: AI can be used for the automated identification of weaknesses using scanners.

- Penetration testing: AI can be used to simulate cyberattacks in order to identify and exploit vulnerabilities.

- Red teaming: AI can be used to simulate a complex, multi-stage attack by a determined adversary, often to test an organization's detection and response capabilities.

Related practices are shown in this graphic:

Figure 1. Offensive Security Testing Practices. (Source: CSA)

CSA notes actual practices can differ based on various factors such as organizational maturity and risk tolerance.

A primary focus of the paper is the shift in cybersecurity caused by advanced AI such as large language models (LLMs) that power generative AI.

"This shift redefines AI from a narrow use case to a versatile and powerful general-purpose technology," said the paper, which details current security challenges and showcases AI's capabilities across five security phases:

- Reconnaissance - Reconnaissance represents the initial phase in any offensive security strategy, aiming to gather extensive data regarding the target's systems, networks, and organizational structure.

- Scanning - Scanning entails systematically examining identified systems to uncover critical details such as live hosts, open ports, running services, and the technologies employed, e.g., through fingerprinting to identify vulnerabilities.

- Vulnerability Analysis - Vulnerability analysis further identifies and prioritizes potential security weaknesses within systems, software, network configurations, and applications.

- Exploitation - Exploitation involves actively exploiting identified vulnerabilities to gain unauthorized access or escalate privileges within a system.

- Reporting - The reporting phase concludes the offensive security engagement by systematically compiling all findings into a detailed report.

"By adopting these AI use cases, security teams and their organizations can significantly enhance their defensive capabilities and secure a competitive edge in cybersecurity," the paper said.

The paper examines current challenges and limitations of offensive security, such as expanding attack surfaces and advanced threats, and delves deeply into LLMs and advanced AI in the form of autonomous agents.

"An agent begins by breaking down the user request into actionable and prioritized plans (Planning). It then reasons with available information to choose appropriate tools or next steps (Reasoning). The LLM cannot execute tools, but attached systems execute the tool correspondingly (Execution) and collect the tool outputs. Then, the LLM interprets the tool output (Analysis) to decide on the next steps used to update the plan. This iterative process enables the agent to continue working cyclically until the user's request is resolved," the paper states.
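To make that cycle concrete, here is a minimal Python sketch of the plan, reason, execute, analyze loop the paper describes. It is an illustration only, not code from the CSA paper: the function names, the `TOOLS` table and the canned outputs are all hypothetical, and the "LLM" steps are stubbed with fixed logic so the example runs without a model or network access.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    tool: str       # name of the tool the harness should run
    argument: str   # input passed to that tool


@dataclass
class AgentState:
    request: str
    plan: List[Step] = field(default_factory=list)
    findings: List[str] = field(default_factory=list)


def plan_request(request: str) -> List[Step]:
    """Planning: stand-in for an LLM call that breaks a request into steps."""
    # Hypothetical fixed plan; a real agent would ask the model for this.
    return [Step("resolve_host", request), Step("list_ports", request)]


def choose_next(state: AgentState) -> Optional[Step]:
    """Reasoning: pick the next step, or None when the plan is exhausted."""
    return state.plan.pop(0) if state.plan else None


def analyze(state: AgentState, step: Step, output: str) -> None:
    """Analysis: record the tool output and update the plan if needed."""
    state.findings.append(f"{step.tool}: {output}")
    # Hypothetical rule: an open HTTPS port prompts a follow-up check.
    if step.tool == "list_ports" and "443" in output:
        state.plan.append(Step("check_tls", step.argument))


# Hypothetical tools returning canned data; nothing here touches a network.
TOOLS: Dict[str, Callable[[str], str]] = {
    "resolve_host": lambda target: "192.0.2.10",
    "list_ports": lambda target: "22,443",
    "check_tls": lambda target: "TLS 1.2",
}


def run_agent(request: str, tools: Dict[str, Callable[[str], str]],
              max_iterations: int = 10) -> List[str]:
    state = AgentState(request=request, plan=plan_request(request))
    for _ in range(max_iterations):  # hard cap so the loop always ends
        step = choose_next(state)
        if step is None:
            break
        # Execution: the surrounding system runs the tool, not the model.
        output = tools[step.tool](step.argument)
        analyze(state, step, output)
    return state.findings


findings = run_agent("example.test", TOOLS)
for line in findings:
    print(line)
```

The iteration cap and the explicit tool table are design choices of this sketch: they keep the model's role limited to choosing steps while the attached system decides what can actually run, which is in the spirit of the human-oversight recommendation later in the paper.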

As for what organizations can do to capitalize on advanced AI for offensive security, CSA provides these recommendations:

- AI Integration: Incorporate AI to automate tasks and augment human capabilities. Leverage AI for data analysis, tool orchestration, generating actionable insights and building autonomous systems where applicable. Adopt AI technologies in offensive security to stay ahead of evolving threats.

- Human Oversight: LLM-powered technologies are unpredictable, can hallucinate, and cause errors. Maintain human oversight to validate AI outputs, improve quality, and ensure technical advantage.

- Governance, Risk, and Compliance (GRC): Implement robust GRC frameworks and controls to ensure safe, secure, and ethical AI use.

"Offensive security must evolve with AI capabilities," CSA said in conclusion. "By adopting AI, training teams on its potential and risks, and fostering a culture of continuous improvement, organizations can significantly enhance their defensive capabilities and secure a competitive edge in cybersecurity."

AI-Powered Offensive Security Everywhere

Using AI to bolster offensive security efforts isn't a new concept, of course, as many organizations, pundits and sites have been discussing the topic for years. For example, the company Cobalt discussed the "Role of Generative AI in Offensive Security" early this year, pointing out AI also helps the bad guys: "Generative AI is introducing advanced methods for tackling cybersecurity. However, this technology not only empowers defenders to anticipate and counteract threats more effectively but also equips attackers with more sophisticated tools."

There's also an "Offensive AI Compilation" repo on GitHub, providing a curated list of useful resources that cover offensive AI.

Offensive security was also a focal point of another recent survey-based report, this one from Bugcrowd and titled "2024 Inside the Mind of a CISO." That report examined "offensive security" CISOs, noting: "There are many different paths a security professional can take to become a CISO, but this article is specifically interested in the offensive security path."

Bugcrowd's definition of the practice is: "Offensive security is a form of proactive security that comprises the constant process of identifying and fixing exploits before attackers can take advantage of them. Offensive security tactics include pen testing, red/purple teaming, bug bounty engagements, and vulnerability disclosure programs (VDPs). Offensive security should be part of a larger risk management strategy."

The study featured Jason Haddix, a former CISO and current CEO at Arcanum Information Security, who said, "I really think an offensive security practitioner is one of the best CISOs you can hire. They have a very unique idea of how to defend a program because they've been the attacker before."

What's more, he said, "It will never not be cool to do offensive security work. It's such a rush breaking into an organization and then helping fix it."

About the Author

David Ramel is an editor and writer at Converge 360.