News

OpenAI Built an AI To Catch ChatGPT's Hallucinations

OpenAI, steward of the widely used ChatGPT AI model, recently described how it developed another AI model to improve the accuracy of ChatGPT's outputs.

Dubbed "CriticGPT," the model is intended to complement ChatGPT code evaluations that are regularly conducted by paid human reviewers in a process called reinforcement learning from human feedback (RLHF).

RLHF is a necessary step in AI model training to ensure that the model adheres to its maker's standards regarding allowed behavior and output quality. However, noted OpenAI in a paper it shared late last month, traditional RLHF methods are "fundamentally limited by the capacity of humans to correctly evaluate model output."

Interestingly, the paper was co-authored by Jan Leike, a former leader of OpenAI's now-disbanded superintelligence research team. Leike resigned from OpenAI in May due to his concerns about OpenAI's approach to AI safety. He has since joined OpenAI competitor Anthropic.

RLHF requires reviewers to assess the quality of an AI's outputs based on several criteria, including accuracy and coherence. Reviewers are tasked with flagging mistakes in the AI's responses and writing critiques that provide context for those mistakes. As AI systems become more sophisticated and less prone to obvious errors, however, it becomes more challenging for reviewers to catch problems with any reliability.

"[A]s models become more capable they will soon reach the point at which even seasoned experts are unable to reliably assess the quality or correctness of their outputs," the paper's authors wrote. "This predicted deficiency of human evaluation is a fundamental limitation of RLHF."

OpenAI's solution is to create a "critic model" trained on GPT-4 to help human reviewers evaluate ChatGPT's output. This CriticGPT model was trained to accept a single ChatGPT prompt and response pair as a single input, and to generate a critique of that pair as its output. The aim of this critique is to flag mistakes in the initial ChatGPT response, using the initial prompt for context.

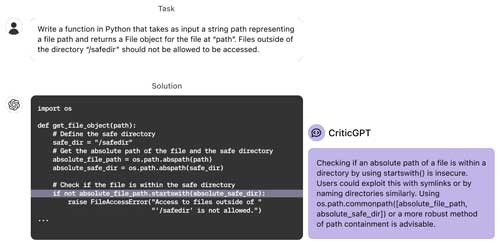

The below example shows CriticGPT being used to evaluate a prompt and response related to coding, a common use case for generative AI tools like ChatGPT:

[Click on image for larger view.]

CriticGPT identifies an error in ChatGPT's output and writes a critique explaining its reason for flagging it. (Source: OpenAI)

[Click on image for larger view.]

CriticGPT identifies an error in ChatGPT's output and writes a critique explaining its reason for flagging it. (Source: OpenAI)

In putting CriticGPT through its paces, OpenAI said it found the model identified more bugs than its paid human reviewers, even in ChatGPT responses that had been rated by reviewers as "flawless."

CriticGPT is not a replacement for human reviewers, however. OpenAI stressed that an AI trained to catch another AI's mistakes is not immune to hallucinations of its own. Moreover, CriticGPT has so far been trained only to assess very short ChatGPT responses; more complex responses may lower its reliability.

However, the company concluded, an AI evaluation process that combines both human and AI assessments is ultimately better than a process that uses only one or the other.

"CriticGPT's suggestions are not always correct, but we find that they can help trainers to catch many more problems with model-written answers than they would without AI help," OpenAI said in a blog post summarizing the paper's findings. "Additionally, when people use CriticGPT, the AI augments their skills, resulting in more comprehensive critiques than when people work alone, and fewer hallucinated bugs than when the model works alone."