News

The Next Cloud Wars Are Being Fought at the AI Hardware Front

Cloud giants Microsoft, Amazon Web Services and Google have found a new battleground: AI chips.

Witness to that is a barrage of new cloud AI chip announcements from the "Big 3" cloud giants, their second-tier challengers and hardware vendors willing to act as arms dealers for whatever cloud platform ponies up.

First, let's look at what those "Big 3" have been up to lately.

AWS

Amazon's cloud might have been caught flat-footed by Microsoft's OpenAI-powered onslaught of AI assistants or "copilots" being infused throughout its software and Azure platform -- and Google's near-panic "code red" response -- but the company has been playing some serious catch-up on multiple AI fronts.

AWS just last week announced "the next generation of two AWS-designed chip families -- AWS Graviton4 and AWS Trainium2 -- delivering advancements in price performance and energy efficiency for a broad range of customer workloads, including machine learning (ML) training and generative artificial intelligence (AI) applications."

[Click on image for larger view.] AWS Graviton4 and AWS Trainium2 (prototype) (source: AWS).

[Click on image for larger view.] AWS Graviton4 and AWS Trainium2 (prototype) (source: AWS).

The company said Graviton4 processors deliver up to 30 percent better compute performance, 50 percent more cores and 75 percent more memory bandwidth than the previous generation.

Trainium2 chips, meanwhile, are purpose-built for high-performance training of machine language foundation models (FMs) and LLMs with up to trillions of parameters.

Google

Just two days ago -- eight days after AWS' announcement -- the company announced Cloud TPU v5p, described as the company's most powerful, scalable, and flexible AI accelerator so far.

The company said new hardware was needed to address the "heightened requirements for training, tuning, and inference" for those ever-growing LLMs increasingly thirsty for compute resources. In this case the hardware is a TPU, or tensor processing unit, powerful custom-built processors built to run projects made on a specific framework. They join CPUs (central processing unit) and GPUs (graphics processing unit).

Wikipedia says the TPU is an AI accelerator application-specific integrated circuit (ASIC) developed by Google for neural network machine learning, using the company's own TensorFlow software.

Like AWS, Google noted those ever-growing LLMs as the basis for its advanced hardware efforts. "Today's larger models, featuring hundreds of billions or even trillions of parameters, require extensive training periods, sometimes spanning months, even on the most specialized systems," the company said. "Additionally, efficient AI workload management necessitates a coherently integrated AI stack consisting of optimized compute, storage, networking, software and development frameworks."

Google noted that TPUs have been used for training and serving its various AI-powered products including YouTube, Gmail, Google Maps, Google Play and Android, and were also used to train, and now serve up, its latest/greatest LLM, the just-unveiled Gemini.

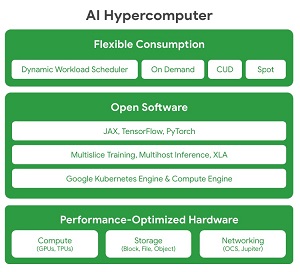

What's more, the new TPU is used to power the company's brand-new AI Hypercomputer, described as "a system of technologies optimized to work in concert to enable modern AI workloads."

[Click on image for larger view.] AI Hypercomputer (source: Google).

[Click on image for larger view.] AI Hypercomputer (source: Google).

"AI Hypercomputer enables developers to access our performance-optimized hardware through the use of open software to tune, manage, and dynamically orchestrate AI training and inference workloads on top of performance-optimized AI hardware," the company said.

Microsoft

Just a couple weeks before Google's announcement, Microsoft at its Ignite event announced its own cloud AI chip offerings, specifically custom-designed chips and integrated systems. First is the Microsoft Azure Maia AI Accelerator, optimized for AI tasks and generative AI. Second is the Microsoft Azure Cobalt CPU, an Arm-based processor tailored to run general-purpose compute workloads on the Microsoft Cloud. (The term "Microsoft Cloud" refers to a broader platform that includes Azure and services like Microsoft 365 and Dynamics 365.)

The Maia 100 AI Accelerator was designed specifically for the Azure hardware stack and was created with help from OpenAI, creator of ChatGPT and the tech powering Microsoft copilots.

"Since first partnering with Microsoft, we've collaborated to co-design Azure's AI infrastructure at every layer for our models and unprecedented training needs," said Sam Altman, CEO of OpenAI. "We were excited when Microsoft first shared their designs for the Maia chip, and we've worked together to refine and test it with our models. Azure's end-to-end AI architecture, now optimized down to the silicon with Maia, paves the way for training more capable models and making those models cheaper for our customers."

[Click on image for larger view.] First Servers Powered by the Microsoft Azure Cobalt 100 CPU (source: Microsoft).

[Click on image for larger view.] First Servers Powered by the Microsoft Azure Cobalt 100 CPU (source: Microsoft).

The Cobalt 100 CPU, meanwhile, is built on Arm architecture, a type of energy-efficient chip design optimized to deliver greater efficiency and performance in low-powered devices like smartphones and cloud-native offerings where power efficiency is crucial.

[Click on image for larger view.] Custom-Built Rack for the Maia 100 AI Accelerator (source: Microsoft).

[Click on image for larger view.] Custom-Built Rack for the Maia 100 AI Accelerator (source: Microsoft).

"The architecture and implementation is designed with power efficiency in mind," said exec Wes McCullough. "We're making the most efficient use of the transistors on the silicon. Multiply those efficiency gains in servers across all our datacenters, it adds up to a pretty big number."

And More

While the above details recent AI chip announcements from the "Big 3" made in just the past few weeks, there is plenty more buzz in the space.

Exactly a week ago, for example, Google announced a partnership with another major AI industry player, Anthropic, that will see that company leveraging Google's Cloud TPU v5e chips for AI inference.

And IBM, trying to break in to the upper echelon of cloud hyperscalers populated by AWS, Azure and Google Cloud, recently announced efforts surrounding a new type of digital AI chip for neural inference called NorthPole. It extends work done for an existing brain-inspired chip project called TrueNorth.

IBM said the first promising set of results from NorthPole chips were recently published in Science.

Meanwhile Broadcom about a week ago announced what it called the "industry's first switch with on-chip neural network."

Specifically, the company unveiled an inference engine called NetGNT (Networking General-purpose Neural-network Traffic-analyzer) in its new, software-programmable Trident 5-X12 chip. "NetGNT works in parallel to augment the standard packet-processing pipeline," IBM said. "The standard pipeline is one-packet/one-path, meaning that it looks at one packet as it takes a specific path through the chip's ports and buffers. NetGNT, in contrast, is an ML inference engine and can be trained to look for different types of traffic patterns that span the entire chip."

And, while Nvidia has lorded over the AI chip space so far, competitors like AMD have also been active, with that company just this week announcing its AMD Instinct MI300 Series or accelerators "powering the growth of AI and HPC at Scale."

Like the others, AMD emphasized its new wares were designed to address the tremendous resources required by today's AI projects.

"AI applications require significant computing speed at lower precisions with big memory to scale generative AI, train models and make predictions, while HPC applications need computing power at higher precisions and resources that can handle large amounts of data and execute complex simulations required by scientific discoveries, weather forecasting, and other data-intensive workloads," the company said.

And that quote pretty much sums up the reason for the tremendous amount of activity surrounding AI chips in the cloud. And we didn't even touch upon Nvidia, the consensus leader in the space (see "AWS, Fueled by Nvidia, Gets In on AI Compute Wars").

If all of the above happened in just the last month or so, imagine what's coming in 2024.

Well, research firm Gartner already has, forecasting in August that worldwide AI chip revenue would reach $53 billion this year and would more than double by 2027.

"The need for efficient and optimized designs to support cost effective execution of AI-based workloads will result in an increase in deployments of custom-designed AI chips," the firm said. "For many organizations, large scale deployments of custom AI chips will replace the current predominant chip architecture -- discrete GPUs -- for a wide range of AI-based workloads, especially those based on generative AI techniques."

Note that the firm's research isn't cloud-centric, but the cloud is where much of the AI hardware advancements are playing out.

Gartner concluded: "Generative AI is also driving demand for high-performance computing systems for development and deployment, with many vendors offering high performance GPU-based systems and networking equipment seeing significant near-term benefits. In the long term, as the hyperscalers look for efficient and cost-effective ways to deploy these applications, Gartner expects an increase in their use of custom-designed AI chips. "

About the Author

David Ramel is an editor and writer at Converge 360.