How-To

Researchers Demonstrate an AI Language Generalization Breakthrough

- By Pure AI Editors

- 11/06/2023

In spite of the amazing capabilities of language-based AI systems like ChatGPT, such systems have not been shown to exhibit "systematic generalization" -- until now. In October 2023, researchers Brendan Lake and Marco Baroni published an article titled "Human-like Systematic Generalization Through a Meta-Learning Neural Network," which describes what may be a significant breakthrough in AI.

Systematic Generalization

Systematic generalization in humans is the ability to more or less automatically use newly acquired words in new scenarios. For example, humans who learn the meaning of a word that is new to them, such as "gaslighting" (psychological manipulation that instills self-doubt in a person), can use the new word in many contexts. The new word can even be applied to unusual scenarios such as, "My dog was gaslighting my cat this morning about sleeping on the couch."

On the other hand, a large language model chat application such as ChatGPT can use the term "gaslighting" effectively only if it has been exposed to the term many times during training. That is likely the case for "gaslighting," but it is not guaranteed for many other words and phrases. AI researchers have debated for decades whether neural network systems can model human cognition if they cannot demonstrate systematic generalization.

The Lake and Baroni results show that a standard neural network sequence-to-sequence architecture, optimized for compositional skills, can mimic human systematic generalization.

An Example

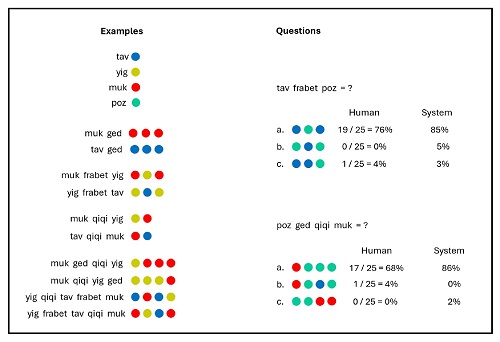

In one set of experiments, 25 human subjects were given a set of 14 examples. Each example consisted of an input of nonsense words and an abstract output made of colored dots. See Figure 1 for one possible example (which was not used in the study).

Figure 1: There are 14 examples on the left and two challenge problems on the right.

In Figure 1, four of the examples are primitives: tav = blue, yig = olive, muk = red and poz = green. The other 10 examples involve functions. For example, "muk frabet yig" = red olive red. Based only on this information, a human should be able to determine the output for "tav frabet poz."

If frabet is interpreted as a function, and muk and yig are interpreted as arguments, the example can be thought of as frabet(muk, yig) = muk-color yig-color muk-color = red olive red. Put another way, the frabet function accepts two arguments and emits three colors, where the first argument's color is in positions 1 and 3, and the second argument's color is in position 2. Therefore, "tav frabet poz" = tav poz tav = blue green blue.
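The reasoning above can be sketched in a few lines of Python. This is a hypothetical illustration of how a single study example pins down the frabet rule, not the method used by Lake and Baroni. It hard-codes the four primitives from Figure 1, assumes every function word sits between exactly two primitive arguments, and the helper names induce_template and apply_template are invented for this example.

```python
# Primitive word-to-color mappings from Figure 1.
PRIMITIVES = {"tav": "blue", "yig": "olive", "muk": "red", "poz": "green"}


def induce_template(example_input: str, example_output: list[str]) -> list[int]:
    """Infer which argument fills each output slot of a two-argument function.

    Assumes (hypothetically) the form "<arg1> <function> <arg2>" with primitive
    arguments whose colors differ, as in "muk frabet yig" = red olive red.
    """
    left, _func, right = example_input.split()
    arg_colors = [PRIMITIVES[left], PRIMITIVES[right]]
    # For every output color, record the index (0 or 1) of the argument that produced it.
    return [arg_colors.index(color) for color in example_output]


def apply_template(template: list[int], query: str) -> list[str]:
    """Apply an induced template to a new "<arg1> <function> <arg2>" query."""
    left, _func, right = query.split()
    arg_colors = [PRIMITIVES[left], PRIMITIVES[right]]
    return [arg_colors[i] for i in template]


frabet = induce_template("muk frabet yig", ["red", "olive", "red"])
print(frabet)                                    # [0, 1, 0]
print(apply_template(frabet, "tav frabet poz"))  # ['blue', 'green', 'blue']
```

The template [0, 1, 0] is just the "first, second, first" pattern described in the text, so the same rule carries over to arguments it has never been paired with.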

In this experiment, 19 out of 25 humans were correct (76 percent accuracy), and the neural system trained for compositional skills achieved 85 percent accuracy. A system like ChatGPT would likely score only about 5 percent accuracy, at best, on such a problem.
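As a quick check of the human figure, the sketch below assumes that a response counts as correct only when the entire color sequence matches the target exactly (the scoring rule is an assumption here). The response list is a placeholder that reproduces only the reported counts, 19 correct out of 25. The incorrect answer shown is invented for illustration and is not taken from the study.

```python
def exact_match_accuracy(responses: list[list[str]], target: list[str]) -> float:
    """Fraction of responses whose full color sequence equals the target."""
    correct = sum(1 for response in responses if response == target)
    return correct / len(responses)


target = ["blue", "green", "blue"]
# Placeholder data: 19 correct responses and 6 copies of a made-up wrong answer.
responses = [target] * 19 + [["blue", "blue", "green"]] * 6
print(f"{exact_match_accuracy(responses, target):.0%}")  # 76%
```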

The Pure AI Experts Weigh In

The Pure AI editors talked to Dr. Paul Smolensky and Dr. James McCaffrey to learn more about the issue. Smolensky is a prominent cognitive science researcher at Johns Hopkins University who specializes in language. McCaffrey is a well-known AI and machine learning scientist and engineer at Microsoft Research.

Smolensky noted, "Since the 1980s, many researchers have doubted that neural networks could master the ability to freely recombine knowledge of parts to produce knowledge of the wholes built from a novel combination of those parts."

He added, "This new study by Lake and Baroni shows, for the first time, that neural networks can be trained to have a level of compositional generalization that is comparable to humans. Their training method, if scaled up to train large language models, can be expected to improve the compositional generalization capacities of those models, which has been persistently problematic."

McCaffrey agreed: "The question of systematic compositional generalization has been a key point of debate for decades. The Lake and Baroni paper, while not absolutely definitive, strongly suggests that the current generation of large language model systems can be significantly improved in a relatively straightforward way."

McCaffrey added, "The speed at which AI research is advancing is quite astonishing. The systems that are widely available today are a giant leap better than those of only 12 months ago. And the systems that will be available 12 months from now will likely be another giant leap forward in capability."