
Researchers Make Computer Chess Programs More Human


- By Pure AI Editors

- 09/06/2022

In some machine learning scenarios, it's useful to make a prediction system that is more human rather than more accurate. The Maia chess program is designed to do just that.

There is a long and fascinating history of the relationship between chess, computer science and artificial intelligence (AI). The first computer programs capable of playing chess appeared in the late 1950s. In 1997, the Deep Blue program defeated Garry Kasparov, who was the reigning world chess champion.

Traditional chess programs such as Stockfish reached superhuman levels of performance about 10 years ago. In 2017, the AlphaZero chess program, based on just nine hours of deep reinforcement learning, stunned the chess and research worlds by beating Stockfish in a 100-game match by a score of 28 wins, 0 losses and 72 draws. In 2018, the Leela chess program, based on AlphaZero, was released as an open source project.

For humans, playing against a strong chess program is no fun at all because there is virtually no chance of winning. It is possible to attenuate the strength of a chess program by limiting how many moves it is allowed to look ahead. But playing against such attenuated programs just doesn't feel like playing against a human competitor.

Researchers have explored the idea of using machine learning to create chess programs that play more like humans. The research paper "Aligning Superhuman AI with Human Behavior: Chess as a Model System" by R. McIlroy-Young et al. describes a chess program named Maia. The paper can be found in several places on the internet using any search engine. In a given chess position, Maia is trained to play the most human-like move rather than the best possible move.

The Maia Chess Programs

There are nine different versions of Maia: Maia 1100, 1200, 1300, 1400, 1500, 1600, 1700, 1800 and 1900. The Maia 1100 program is designed to play like a human player who is rated 1100-1199 (beginner). Maia 1500 is designed to play like a human who is rated 1500-1599, which is typical club player strength. Maia 1900 is designed to play like a human who is rated 1900-1999, which is expert level but not master strength.

Figure 1: The Maia5 Bot (Maia 1500) Playing Chess Against a Human

To train a Maia version, 10,000 games played between two human players both rated in the appropriate range were downloaded from the popular lichess.org website. The lichess site has more than 1 billion chess games, with about 2 million games played per day.
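The game-selection rule can be illustrated with a short sketch. This is not the researchers' code. The function names and the 100-point band arithmetic are assumptions made for illustration, based only on the rating ranges described in this article.

```python
from typing import Optional

# Assumed from the article: nine 100-point bands, 1100-1199 through 1900-1999.
MAIA_BANDS = range(1100, 2000, 100)


def rating_band(rating: int) -> Optional[int]:
    """Return the band (e.g. 1500 for 1500-1599) or None if outside 1100-1999."""
    band = (rating // 100) * 100
    return band if band in MAIA_BANDS else None


def game_band(white_rating: int, black_rating: int) -> Optional[int]:
    """Return the band a game belongs to only if BOTH players fall in that band."""
    white_band = rating_band(white_rating)
    if white_band is not None and white_band == rating_band(black_rating):
        return white_band
    return None
```

For example, a game between players rated 1520 and 1588 would go into the Maia 1500 pool, while a game between players rated 1590 and 1610 would be skipped because the two players fall in different bands.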

For each of these 10,000 games, the first 10 moves were discarded because players often use memorized opening moves. Moves towards the end of a game were discarded if a player had less than 30 seconds left on the clock, because such moves are somewhat random, made only to avoid losing on time, and include many blunders. The result was nine test datasets, each with approximately 500,000 positions.
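The two filtering rules above can be sketched in a few lines. This is a minimal illustration, not the researchers' pipeline. The `MoveRecord` type and its field names are hypothetical, and the sketch assumes "first 10 moves" means full-move numbers 1 through 10.

```python
from dataclasses import dataclass
from typing import List

OPENING_MOVES_TO_SKIP = 10  # from the article: first 10 moves are discarded
MIN_CLOCK_SECONDS = 30      # from the article: drop moves made with under 30 seconds left


@dataclass
class MoveRecord:
    """Hypothetical record for one move; field names are assumptions."""
    move_number: int      # 1-based full-move number within the game
    clock_seconds: float  # time left on the mover's clock before the move
    move: str             # the move played, in any notation


def keep_for_dataset(rec: MoveRecord) -> bool:
    """Keep a move only if it is past the opening and not played in time trouble."""
    return (rec.move_number > OPENING_MOVES_TO_SKIP
            and rec.clock_seconds >= MIN_CLOCK_SECONDS)


def filter_game(records: List[MoveRecord]) -> List[MoveRecord]:
    """Apply both rules to every move of one game, preserving order."""
    return [r for r in records if keep_for_dataset(r)]
```

A move at move number 11 with two minutes on the clock is kept, while a move at move number 40 with 12 seconds left is dropped.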

Maia is based on the Leela chess program. But instead of trying to find the best possible move in a chess position, Maia uses the training data to find a move that is likely to be played by a human. Note that it's not possible to simply store every chess position and look up the human move. Estimates put the number of legal chess positions at more than 10^40, and the number of possible games at roughly 10^120, which is vastly larger than the estimated number of atoms in the known universe, about 10^82.

Three of the nine Maia chess programs (Maia 1100, 1500 and 1900) have been uploaded to the lichess.org site. The screenshot in Figure 1 shows an example where a human expert is playing the Maia 1500 program. The human offered a knight sacrifice, which the Maia bot unwisely accepted, just as most human opponents would. A few moves later, the human player had a crushing attack and soon checkmated the Maia bot. A strong chess program would have computed the consequences of accepting the knight sacrifice and made a safer move.

Predicting Moves and Predicting Errors

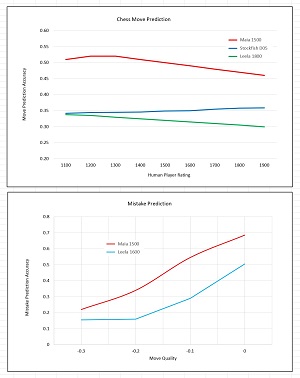

For a given chess position, a Maia program correctly predicts the move that would be made by a human player about 50 percent of the time. See the top graph in Figure 2. The graph shows that the Maia 1500 program predicts human moves significantly better than the Stockfish D05 and Leela 1800 programs. Stockfish D05 is a version of Stockfish that is attenuated to look only five moves ahead (typical of a human player rated between 1500 and 1600). Leela 1800 is a version of Leela that is attenuated so that its playing strength is roughly equivalent to a human player rated about 1800. The Stockfish D05 and Leela 1800 programs predict the move that would be made by a human player about 35 percent of the time.
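The move-matching figure quoted above is a simple top-choice accuracy: the fraction of positions where the program's chosen move equals the move the human actually played. The sketch below shows one way that metric could be computed. The function name and the use of plain move strings are assumptions for illustration, not the paper's evaluation code.

```python
from typing import Sequence


def move_match_accuracy(predicted: Sequence[str], played: Sequence[str]) -> float:
    """Fraction of positions where the predicted move equals the human's move.

    Both sequences must be aligned: item i of each refers to the same position.
    """
    if len(predicted) != len(played):
        raise ValueError("predicted and played must have the same length")
    if not played:
        return 0.0  # no positions to score
    matches = sum(p == h for p, h in zip(predicted, played))
    return matches / len(played)
```

With four positions where the program matches the human on two of them, the function returns 0.5, which corresponds to the roughly 50 percent level reported for Maia.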

Figure 2: The Maia Programs Predict Human Chess Moves and Human Errors

For a given chess position, a Maia program also predicts human mistakes more accurately than standard chess programs. The bottom graph in Figure 2 shows that for moderate mistakes, Maia 1500 predicts with about 35 percent accuracy while the Leela 1600 program predicts with about 15 percent accuracy. The implication is that, as intended, Maia programs play more like humans than chess programs that are optimized to find the best move.

What's the Point?

The Pure AI editors spoke with Dr. James McCaffrey from Microsoft Research, who works with machine learning and is also an expert-rated chess player. "The idea of creating machine learning models that are more human rather than more accurate has interesting potential applications," McCaffrey said. "Imagine a pilot flight training scenario. Flight simulators collect huge amounts of information. A system that predicts pilot errors could be extremely valuable."

McCaffrey added, "Robotics is another scenario where a more human-like system could be useful. Imagine an industrial setting where humans and robots work together. A robot that is trained to be more human rather than more accurate could be safer and give an overall system that is more efficient."